Compare commits

130 Commits

| Author | SHA1 | Date |
|---|---|---|
| | b276b44ded | |
| | 3792649ed9 | |
| | 5f08956605 | |
| | 643d89ed7b | |
| | 8ca459593c | |
| | ee4208cc19 | |
| | f6330e4bb8 | |
| | 4f3cf10a5c | |
| | aa1954e81e | |
| | 482597bd0e | |
| | d4b90e681c | |
| | fcf9e43c4c | |
| | b8d8cd19d3 | |
| | 536c2d966c | |
| | f49cb0a5ac | |
| | cef6bb2340 | |
| | 4c03253b30 | |
| | ed09bb7370 | |
| | 5c46a25173 | |
| | d1538f00df | |
| | afa4acbbfb | |
| | d9a530485f | |
| | b2ad70b028 | |
| | f49aae8ab0 | |
| | f6debcf799 | |
| | edbc4f1359 | |
| | 5242f066e7 | |
| | af186800fa | |
| | 2bff4a5f44 | |
| | edb73d50cf | |
| | 3dc09d55fd | |
| | 079fb1b0f5 | |
| | 17b2aa8111 | |
| | 78d484cfe0 | |
| | 182e12817d | |
| | 9179d57cc9 | |
| | 9cb9b746d8 | |
| | 57a0089664 | |
| | 53f7608872 | |
| | 838ce5b45a | |
| | e878b9557e | |
| | 6638d37d6f | |
| | 4c29e15329 | |
| | 48890a2121 | |
| | e312d7e18c | |
| | 6c38aaebf3 | |
| | 18b0ac44bf | |
| | b6749eff2f | |
| | c73a103280 | |
| | a5d194f730 | |
| | 6320436b88 | |
| | 87325927ed | |
| | 4435cdf5b6 | |
| | b041654611 | |
| | e833dcefd4 | |
| | 29680f0ee8 | |
| | 670329700c | |
| | 62e17af311 | |
| | e3938a2c85 | |
| | 49ffc3d99e | |
| | 34bb1c34a2 | |
| | b27a56a296 | |
| | ecd7095123 | |
| | d449513861 | |
| | 6709e229b3 | |
| | 00a8f16262 | |
| | 00d16f8615 | |
| | c90676d2b2 | |
| | b89c011ad5 | |
| | c3de31aa88 | |
| | b6df0be865 | |
| | a89f84f76e | |
| | 5a916cc221 | |
| | dcf421ff93 | |
| | d655258311 | |
| | f6d13d4318 | |
| | d5350d7c30 | |
| | c63fd04c92 | |
| | 64b418a551 | |
| | 36c9c84047 | |
| | 88b4aaa301 | |
| | eac4c227c9 | |
| | d5eb7d4a55 | |
| | 80b15e16e2 | |
| | cfacedbb1a | |
| | c3bab033ed | |
| | 524f9bd84f | |
| | 4658ede9d6 | |
| | f7b463aca1 | |
| | c1a6e92b1d | |
| | eefb0e427e | |
| | c23d81b740 | |
| | 6dac231040 | |
| | 6fd3e531c3 | |
| | c1c05991cf | |
| | ab378e14d1 | |
| | c0f9bf9ef5 | |
| | bc6a7b3f20 | |
| | 036b0823b9 | |
| | be99595bde | |
| | 01836a4b4c | |
| | 9f3b3dd773 | |
| | 3210b9e752 | |
| | 2d66b8bf1d | |
| | f3d6afead1 | |
| | 1690b58c67 | |
| | 2e8eda5c67 | |
| | 74a4dc2533 | |
| | be7ae2b580 | |
| | c1de1bb480 | |
| | a02c1204ce | |
| | 258779e234 | |
| | 342defca6b | |
| | 580aec7d57 | |
| | 54b91af76c | |
| | 6d9b4efb7e | |
| | 6f51f445b7 | |
| | 6de9518de7 | |
| | 517cc020c7 | |
| | 2c699652b0 | |
| | 2e4ffc0e79 | |
| | 70c91ec858 | |
| | b7c4609c2d | |
| | 7f69367666 | |
| | fa17aa1f78 | |
| | 7e560b4247 | |
| | 9a29ea51e3 | |
| | 6c10713e18 | |
| | b810982a17 | |
| | 23c95fd2ea | |

11

.gitignore

vendored

@@ -1,3 +1,14 @@
nostr_core_lib/
nips/
build/
relay.log
relay.pid
Trash/
src/version.h
dev-config/
db/
copy_executable_local.sh
nostr_login_lite/
style_guide/
nostr-tools

6

.gitmodules

vendored

@@ -1,3 +1,9 @@
[submodule "nostr_core_lib"]
	path = nostr_core_lib
	url = https://git.laantungir.net/laantungir/nostr_core_lib.git
[submodule "c_utils_lib"]
	path = c_utils_lib
	url = ssh://git@git.laantungir.net:2222/laantungir/c_utils_lib.git
[submodule "text_graph"]
	path = text_graph
	url = ssh://git@git.laantungir.net:2222/laantungir/text_graph.git

298

.roo/architect/AGENTS.md

Normal file

@@ -0,0 +1,298 @@

# AGENTS.md - AI Agent Integration Guide for Architect Mode

**Project-Specific Information for AI Agents Working with C-Relay in Architect Mode**

## Critical Architecture Understanding

### System Architecture Overview
C-Relay implements a **unique event-based configuration architecture** that fundamentally differs from traditional Nostr relays:

```
┌─────────────────┐    ┌──────────────────┐    ┌─────────────────┐
│   WebSocket     │    │  Configuration   │    │    Database     │
│   + HTTP        │◄──►│  Event System    │◄──►│    (SQLite)     │
│   (Port 8888)   │    │  (Kind 33334)    │    │   Schema v4     │
└─────────────────┘    └──────────────────┘    └─────────────────┘
         │                       │                       │
         ▼                       ▼                       ▼
┌─────────────────┐    ┌──────────────────┐    ┌─────────────────┐
│ nostr_core_lib  │    │   Admin Key      │    │ Event Storage   │
│ (Crypto/Sigs)   │    │   Management     │    │ + Subscriptions │
└─────────────────┘    └──────────────────┘    └─────────────────┘
```

### Core Architectural Principles

#### 1. Event-Driven Configuration
**Design Philosophy**: Configuration as cryptographically signed events rather than files
- **Benefits**: Auditability, remote management, tamper-evidence
- **Trade-offs**: Complexity in configuration changes, admin key management burden
- **Implementation**: Kind 33334 events stored in same database as relay events

#### 2. Identity-Based Database Naming
**Design Philosophy**: Database file named by relay's generated public key
- **Benefits**: Prevents database conflicts, enables multi-relay deployments
- **Trade-offs**: Cannot predict database filename, complicates backup strategies
- **Implementation**: `<relay_pubkey>.db` created in build/ directory
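
The naming rule above can be sketched in a few lines of Python. This is only an illustration, not C-Relay's actual code: `database_path` is a hypothetical helper, and it assumes the relay pubkey is the usual Nostr 32-byte key written as 64 lowercase hex characters.

```python
import re
from pathlib import Path

# Nostr public keys are 32 bytes, written as 64 lowercase hex characters.
HEX_PUBKEY = re.compile(r"[0-9a-f]{64}")


def database_path(relay_pubkey: str, directory: str = "build") -> Path:
    """Return '<directory>/<relay_pubkey>.db' (hypothetical helper)."""
    if not HEX_PUBKEY.fullmatch(relay_pubkey):
        raise ValueError("expected 64 lowercase hex characters")
    return Path(directory) / f"{relay_pubkey}.db"
```

Because the filename depends on a key generated at first startup, backup tooling would have to discover it (for example by globbing `build/*.db`) rather than hard-code it.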

#### 3. Single-Binary Deployment
**Design Philosophy**: All functionality embedded in one executable
- **Benefits**: Simple deployment, no external dependencies to manage
- **Trade-offs**: Larger binary size, harder to modularize
- **Implementation**: SQL schema embedded as header file, nostr_core_lib as submodule

#### 4. Dual-Protocol Support
**Design Philosophy**: WebSocket (Nostr) and HTTP (NIP-11) on same port
- **Benefits**: Simplified port management, reduced infrastructure complexity
- **Trade-offs**: Protocol detection overhead, libwebsockets dependency
- **Implementation**: Request routing based on HTTP headers and upgrade requests
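
Header-based routing of this kind might look roughly like the sketch below. It is written in Python for brevity and does not mirror the libwebsockets callbacks the relay actually uses; `route_request` and its return labels are hypothetical names.

```python
def route_request(headers: dict) -> str:
    """Classify a request on the shared port from its HTTP headers."""
    h = {k.lower(): v.lower() for k, v in headers.items()}
    # A WebSocket handshake carries "Upgrade: websocket" and lists
    # "Upgrade" among the Connection header tokens (RFC 6455).
    connection_tokens = [t.strip() for t in h.get("connection", "").split(",")]
    if h.get("upgrade") == "websocket" and "upgrade" in connection_tokens:
        return "websocket"
    # NIP-11 relay information is requested with this Accept type.
    if "application/nostr+json" in h.get("accept", ""):
        return "nip11"
    return "http"
```

For example, `route_request({"Accept": "application/nostr+json"})` returns `"nip11"`, while a browser request with `Accept: text/html` falls through to `"http"`.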

## Architectural Decision Analysis

### Configuration System Design
**Traditional Approach vs C-Relay:**
```
Traditional:                    C-Relay:
config.json          →         kind 33334 events
ENV variables        →         cryptographically signed tags
File watching        →         database polling/restart
```

**Implications for Extensions:**
- Configuration changes require event signing capabilities
- No hot-reloading without architectural changes
- Admin key loss = complete database reset required
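
To make "event signing capabilities" concrete, the sketch below computes the NIP-01 event id that a signer would then sign. The id rule (SHA-256 over the compact JSON array `[0, pubkey, created_at, kind, tags, content]`) comes from NIP-01; the tag layout of the sample configuration event is an assumption for illustration and is not taken from the C-Relay source.

```python
import hashlib
import json


def event_id(pubkey, created_at, kind, tags, content):
    """NIP-01 event id: SHA-256 of the compact JSON serialization."""
    payload = [0, pubkey, created_at, kind, tags, content]
    # Compact separators and raw UTF-8 cover typical content; NIP-01's exact
    # escaping rules differ from json.dumps for some rare control characters.
    serialized = json.dumps(payload, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(serialized.encode("utf-8")).hexdigest()


# Hypothetical kind 33334 configuration event; pubkey and tags are placeholders.
admin_pubkey = "a" * 64
config_tags = [["d", "b" * 64], ["relay_description", "My Relay"]]
config_event_id = event_id(admin_pubkey, 1700000000, 33334, config_tags, "")
```

The Schnorr signature over this id is what makes the stored configuration tamper-evident; producing it requires the admin private key, which is why losing that key blocks further changes.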

### Database Architecture Decisions
**Schema Design Philosophy:**
- **Event Tags as JSON**: Separate table with JSON column instead of normalized relations
- **Application-Level Filtering**: NIP-40 expiration handled in C, not SQL
- **Embedded Schema**: Version 4 schema compiled into binary
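
Application-level expiration filtering could be sketched as follows. The `expiration` tag with a Unix timestamp in seconds is defined by NIP-40; the function names are hypothetical, and treating the exact boundary second as already expired is a choice made for this sketch.

```python
import time
from typing import Optional


def expiration_of(event: dict) -> Optional[int]:
    """Return the NIP-40 expiration timestamp, or None if absent or invalid."""
    for tag in event.get("tags", []):
        if len(tag) >= 2 and tag[0] == "expiration":
            try:
                return int(tag[1])
            except ValueError:
                return None
    return None


def is_expired(event: dict, now: Optional[int] = None) -> bool:
    """True when the event carries an expiration that has been reached."""
    expires_at = expiration_of(event)
    if expires_at is None:
        return False
    current = int(time.time()) if now is None else now
    return current >= expires_at
```

A query path would fetch candidate rows from SQLite first and then drop those for which `is_expired` is true before sending them to the client.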

**Scaling Considerations:**
- SQLite suitable for small-to-medium relays (< 10k concurrent connections)
- Single-writer limitation of SQLite affects write-heavy workloads
- JSON tag storage optimizes for read performance over write normalization

### Memory Management Architecture
**Thread Safety Model:**
- Global subscription manager with mutex protection
- Per-client subscription limits enforced in memory
- WebSocket connection state managed by libwebsockets
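
A mutex-protected manager with a per-client limit might be shaped like the sketch below. It is a Python analogue rather than the relay's C implementation; the class name, method names, and the default limit of 25 are assumptions.

```python
import threading
from collections import defaultdict


class SubscriptionManager:
    """In-memory subscription registry guarded by a single lock."""

    def __init__(self, max_per_client: int = 25):
        self._lock = threading.Lock()
        self._subs = defaultdict(dict)  # client_id -> {sub_id: filters}
        self._max = max_per_client

    def add(self, client_id, sub_id, filters) -> bool:
        with self._lock:
            subs = self._subs[client_id]
            # Reusing an existing subscription id replaces it (NIP-01),
            # so only genuinely new ids count against the limit.
            if sub_id not in subs and len(subs) >= self._max:
                return False
            subs[sub_id] = filters
            return True

    def remove_client(self, client_id) -> None:
        """Drop all state for a client, e.g. on disconnect."""
        with self._lock:
            self._subs.pop(client_id, None)

    def count(self, client_id) -> int:
        with self._lock:
            return len(self._subs.get(client_id, {}))
```

Holding one lock for every operation keeps the sketch simple; it also means any slow work done while the lock is held would stall all other clients.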

**Resource Management:**
- JSON objects use reference counting (jansson library)
- String duplication pattern for configuration values
- Automatic cleanup on client disconnect

## Architectural Extension Points

### Adding New Configuration Options
**Required Changes:**
1. Update [`default_config_event.h`](src/default_config_event.h) template
2. Add parsing logic in [`config.c`](src/config.c) `load_config_from_database()`
3. Add global config struct field in [`config.h`](src/config.h)
4. Update documentation in [`docs/configuration_guide.md`](docs/configuration_guide.md)

### Adding New NIP Support
**Integration Pattern:**
1. Event validation in [`request_validator.c`](src/request_validator.c)
2. Protocol handling in [`main.c`](src/main.c) WebSocket callback
3. Database storage considerations in schema
4. Add test in `tests/` directory

### Scaling Architecture
**Current Limitations:**
- Single process, no horizontal scaling
- SQLite single-writer bottleneck
- Memory-based subscription management

**Potential Extensions:**
- Redis for subscription state sharing
- PostgreSQL for better concurrent write performance
- Load balancer for read scaling with multiple instances

## Deployment Architecture Patterns

### Development Deployment
```
Developer Machine:
├── ./make_and_restart_relay.sh
├── build/c_relay_x86
├── build/<relay_pubkey>.db
└── relay.log
```

### Production SystemD Deployment
```
/opt/c-relay/:
├── c_relay_x86
├── <relay_pubkey>.db
├── systemd service (c-relay.service)
└── c-relay user isolation
```

### Container Deployment Architecture
```
Container:
├── Multi-stage build (deps + binary)
├── Volume mount for database persistence
├── Health checks via NIP-11 endpoint
└── Signal handling for graceful shutdown
```

### Reverse Proxy Architecture
```
Internet → Nginx/HAProxy → C-Relay
    ├── WebSocket upgrade handling
    ├── SSL termination
    └── Rate limiting
```

## Security Architecture Considerations

### Key Management Design
**Admin Key Security Model:**
- Generated once, displayed once, never stored
- Required for all configuration changes
- Loss requires complete database reset

**Relay Identity Model:**
- Separate keypair for relay identity
- Public key used for database naming
- Private key never exposed to clients

### Event Validation Pipeline
```
WebSocket Input → JSON Parse → Schema Validate → Signature Verify → Store
                      ↓               ↓                 ↓
                   reject          reject            reject        success
```
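
The pipeline above can be sketched as a chain of early returns. This is a minimal Python illustration, not the relay's C code: `validate_event` is a hypothetical name, and `verify_signature` and `store` are injected stand-ins for the nostr_core_lib check and the SQLite insert. The required fields and their types follow NIP-01.

```python
import json

# Event fields and JSON types required by NIP-01.
REQUIRED_FIELDS = {
    "id": str, "pubkey": str, "created_at": int,
    "kind": int, "tags": list, "content": str, "sig": str,
}


def validate_event(raw: str, verify_signature, store) -> str:
    """Run parse -> schema -> signature -> store, rejecting at the first failure."""
    try:
        event = json.loads(raw)
    except json.JSONDecodeError:
        return "reject: invalid JSON"
    if not isinstance(event, dict):
        return "reject: not an object"
    for field, expected in REQUIRED_FIELDS.items():
        # Exact type match so that JSON booleans are not accepted as integers.
        if type(event.get(field)) is not expected:
            return f"reject: bad field {field}"
    if not verify_signature(event):
        return "reject: bad signature"
    store(event)
    return "success"
```

Ordering the cheap checks first means malformed input is discarded before any cryptographic work is done.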

### Attack Surface Analysis
**Network Attack Vectors:**
- WebSocket connection flooding (mitigated by libwebsockets limits)
- JSON parsing attacks (handled by jansson library bounds checking)
- SQL injection (prevented by prepared statements)

**Configuration Attack Vectors:**
- Admin key compromise (complete relay control)
- Event signature forgery (prevented by nostr_core_lib validation)
- Replay attacks (event timestamp validation required)

## Non-Obvious Architectural Considerations

### Database Evolution Strategy
**Current Limitations:**
- Schema changes require database recreation
- No migration system for configuration events
- Version 4 schema embedded in binary

**Future Architecture Needs:**
- Schema versioning and migration system
- Backward compatibility for configuration events
- Database backup/restore procedures

### Configuration Event Lifecycle
**Event Flow:**
```
Admin Signs Event → WebSocket Submit → Validate → Store → Restart Required
                                          ↓         ↓            ↓
                               Signature Check  Database   Config Reload
```

**Architectural Implications:**
- No hot configuration reloading
- Configuration changes require planned downtime
- Event ordering matters for multiple simultaneous changes

### Cross-Architecture Deployment
**Build System Architecture:**
- Auto-detection of host architecture
- Cross-compilation support for ARM64
- Architecture-specific binary outputs

**Deployment Implications:**
- Binary must match target architecture
- Dependencies must be available for target architecture
- Debug tooling is architecture-specific

### Performance Architecture Characteristics
**Bottlenecks:**
1. **SQLite Write Performance**: Single writer limitation
2. **JSON Parsing**: Per-event parsing overhead
3. **Signature Validation**: Cryptographic operations per event
4. **Memory Management**: JSON object lifecycle management

**Optimization Points:**
- Prepared statement reuse
- Connection pooling for concurrent reads
- Event batching for bulk operations
- Subscription indexing strategies

### Integration Architecture Patterns
**Monitoring Integration:**
- NIP-11 endpoint for health checks
- Log file monitoring for operational metrics
- Database query monitoring for performance
- Process monitoring for resource usage

**Backup Architecture:**
- Database file backup (SQLite file copy)
- Configuration event export/import
- Admin key secure storage (external to relay)
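
The list above mentions a plain file copy. As an alternative technique (not something the source describes), SQLite's online backup API can snapshot a database that is still in use, which avoids copying a file mid-write. A minimal sketch with Python's standard `sqlite3` module, using a hypothetical `backup_database` helper:

```python
import sqlite3


def backup_database(src_path: str, dest_path: str) -> None:
    """Copy a SQLite database with the online backup API."""
    src = sqlite3.connect(src_path)
    dest = sqlite3.connect(dest_path)
    try:
        # Connection.backup copies every page of the source into dest.
        src.backup(dest)
    finally:
        dest.close()
        src.close()
```

With identity-based naming, the source path would be the `<relay_pubkey>.db` file found in the relay's working directory. The admin private key is not in the database, so it still has to be backed up separately.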

### Future Extension Architectures
**Multi-Relay Coordination:**
- Database sharding by event kind
- Cross-relay event synchronization
- Distributed configuration management

**Plugin Architecture Possibilities:**
- Event processing pipeline hooks
- Custom validation plugins
- External authentication providers

**Scaling Architecture Options:**
- Read replicas with PostgreSQL migration
- Event stream processing with message queues
- Microservice decomposition (auth, storage, validation)

## Architectural Anti-Patterns to Avoid

1. **Configuration File Addition**: Breaks event-based config paradigm
2. **Direct Database Modification**: Bypasses signature validation
3. **Hard-Coded Ports**: Conflicts with auto-fallback system
4. **Schema Modifications**: Requires database recreation
5. **Admin Key Storage**: Violates security model
6. **Blocking Operations**: Interferes with WebSocket event loop
7. **Memory Leaks**: JSON objects must be properly reference counted
8. **Thread Unsafe Operations**: Global state requires proper synchronization

## Architecture Decision Records (Implicit)

### Decision: Event-Based Configuration
**Context**: Traditional config files vs. cryptographic auditability
**Decision**: Store configuration as signed Nostr events
**Consequences**: Complex configuration changes, enhanced security, remote management capability

### Decision: SQLite Database
**Context**: Database choice for relay storage
**Decision**: Embedded SQLite with JSON tag storage
**Consequences**: Simple deployment, single-writer limitation, application-level filtering

### Decision: Single Binary Deployment
**Context**: Dependency management vs. deployment simplicity
**Decision**: Embed all dependencies and schema in binary
**Consequences**: Larger binary, simple deployment, version coupling

### Decision: Dual Protocol Support
**Context**: WebSocket for Nostr, HTTP for NIP-11
**Decision**: Same port serves both protocols
**Consequences**: Simplified deployment, protocol detection overhead, libwebsockets dependency

These architectural decisions form the foundation of C-Relay's unique approach to Nostr relay implementation and should be carefully considered when planning extensions or modifications.


7

.roo/commands/push.md

Normal file

@@ -0,0 +1,7 @@
---
description: "Brief description of what this command does"
---

Run increment_and_push.sh, and supply a good git commit message. For example:

./increment_and_push.sh "Fixed the bug with nip05 implementation"

1

.roo/rules-code/rules.md

Normal file

@@ -0,0 +1 @@
Use ./make_and_restart_relay.sh instead of make to build the project.

1

.rooignore

Normal file

@@ -0,0 +1 @@
src/embedded_web_content.c

152

AGENTS.md

Normal file

@@ -0,0 +1,152 @@
# AGENTS.md - AI Agent Integration Guide

**Project-Specific Information for AI Agents Working with C-Relay**

## Critical Build Commands

### Primary Build Command
```bash
./make_and_restart_relay.sh
```
**Never use `make` directly.** The project requires the custom restart script which:
- Handles database preservation/cleanup based on flags
- Manages architecture-specific binary detection (x86/ARM64)
- Performs automatic process cleanup and port management
- Starts relay in background with proper logging

### Architecture-Specific Binary Outputs
- **x86_64**: `./build/c_relay_x86`
- **ARM64**: `./build/c_relay_arm64`
- **Other**: `./build/c_relay_$(ARCH)`

### Database File Naming Convention
- **Format**: `<relay_pubkey>.db` (NOT `.nrdb` as shown in docs)
- **Location**: Created in `build/` directory during execution
- **Cleanup**: Use `--preserve-database` flag to retain between builds

## Critical Integration Issues

### Event-Based Configuration System
- **No traditional config files** - all configuration stored in config table
- Admin private key shown **only once** on first startup
- Configuration changes require cryptographically signed events
- Database path determined by generated relay pubkey

### First-Time Startup Sequence
1. Relay generates admin keypair and relay keypair
2. Creates database file with relay pubkey as filename
3. Stores default configuration in config table
4. **CRITICAL**: Admin private key displayed once and never stored on disk

### Port Management
- Default port 8888 with automatic fallback (8889, 8890, etc.)
- Script performs port availability checking before libwebsockets binding
- Process cleanup includes force-killing processes on port 8888
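
The fallback behaviour can be illustrated with a small probe that tries consecutive ports. This is a sketch in Python, not the relay's or the script's actual logic; `first_free_port` and its `attempts` parameter are hypothetical.

```python
import socket


def first_free_port(start: int = 8888, attempts: int = 10,
                    host: str = "127.0.0.1") -> int:
    """Return the first port in [start, start + attempts) that can be bound."""
    for port in range(start, min(start + attempts, 65536)):
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
            try:
                probe.bind((host, port))
            except OSError:
                continue  # port is busy, try the next one
            return port
    raise RuntimeError("no free port in the probed range")
```

The probe socket is closed before the caller binds for real, so another process can still take the port in between. A pre-check like this narrows the window but does not remove the race.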

### Database Schema Dependencies
- Uses embedded SQL schema (`sql_schema.h`)
- Schema version 4 with JSON tag storage
- **Critical**: Event expiration filtering done at application level, not SQL level

### Admin API Event Structure
```json
{
  "kind": 23456,
  "content": "base64_nip44_encrypted_command_array",
  "tags": [
    ["p", "<relay_pubkey>"]
  ]
}
```

**Configuration Commands** (encrypted in content):
- `["relay_description", "My Relay"]`
- `["max_subscriptions_per_client", "25"]`
- `["pow_min_difficulty", "16"]`

**Auth Rule Commands** (encrypted in content):
- `["blacklist", "pubkey", "hex_pubkey_value"]`
- `["whitelist", "pubkey", "hex_pubkey_value"]`

**Query Commands** (encrypted in content):
- `["auth_query", "all"]`
- `["system_command", "system_status"]`
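
Assembling such an event before signing might look like the sketch below. It only shows the shape described above: `build_admin_event` is a hypothetical helper, and `encrypt` is an injected placeholder for a real NIP-44 implementation, which is not reproduced here. The event would still need `pubkey`, `id`, and `sig` added by a signer.

```python
import json
import time


def build_admin_event(relay_pubkey: str, command: list, encrypt) -> dict:
    """Build an unsigned kind 23456 event carrying one command array."""
    plaintext = json.dumps(command, separators=(",", ":"))
    return {
        "kind": 23456,
        "created_at": int(time.time()),
        # `encrypt` stands in for NIP-44 encryption to the relay's pubkey.
        "content": encrypt(plaintext),
        "tags": [["p", relay_pubkey]],
    }
```

For instance, `build_admin_event(relay_pubkey, ["system_command", "system_status"], encrypt)` would produce the query command from the list above, addressed to the relay through the `p` tag.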

### Process Management
```bash
# Kill existing relay processes
pkill -f "c_relay_"

# Check running processes
ps aux | grep c_relay_

# Force kill port binding
fuser -k 8888/tcp
```

### Cross-Compilation Specifics
- ARM64 requires explicit dependency installation: `make install-arm64-deps`
- Uses `aarch64-linux-gnu-gcc` with specific library paths
- PKG_CONFIG_PATH must be set for ARM64: `/usr/lib/aarch64-linux-gnu/pkgconfig`

### Testing Integration
- Tests expect relay running on default port
- Use `tests/quick_error_tests.sh` for validation
- Event configuration tests: `tests/event_config_tests.sh`

### SystemD Integration Considerations
- Service runs as `c-relay` user in `/opt/c-relay`
- Database files created in WorkingDirectory automatically
- No environment variables needed (event-based config)
- Resource limits: 65536 file descriptors, 4096 processes

### Development vs Production Differences
- Development: `make_and_restart_relay.sh` (default database cleanup)
- Production: `make_and_restart_relay.sh --preserve-database`
- Debug build requires manual gdb attachment to architecture-specific binary

### Critical File Dependencies
- `nostr_core_lib/` submodule must be initialized and built first
- Version header auto-generated from git tags: `src/version.h`
- Schema embedded in binary from `src/sql_schema.h`

### WebSocket Protocol Specifics
- Supports both WebSocket (Nostr protocol) and HTTP (NIP-11)
- NIP-11 requires `Accept: application/nostr+json` header
- CORS headers automatically added for NIP-11 compliance

### Memory Management Notes
- Persistent subscription system with thread-safe global manager
- Per-session subscription limits enforced
- Event filtering done at C level, not SQL level for NIP-40 expiration

### Configuration Override Behavior
- CLI port override applies during first-time startup and existing relay restarts
- After database creation, all config comes from events (but CLI overrides can still be applied)
- Database path cannot be changed after initialization

## Non-Obvious Pitfalls

1. **Database Lock Issues**: Script handles SQLite locking by killing existing processes first
2. **Port Race Conditions**: Pre-check + libwebsockets binding can still fail due to timing
3. **Key Loss**: Admin private key loss requires complete database deletion and restart
4. **Architecture Detection**: Build system auto-detects but cross-compilation requires manual setup
5. **Event Storage**: Ephemeral events (kind 20000-29999) accepted but not stored
6. **Signature Validation**: All events validated with `nostr_verify_event_signature()` from nostr_core_lib
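
Pitfall 5 follows from the kind ranges in NIP-01, which the sketch below encodes. The function names are hypothetical, and treating every kind outside the listed ranges as regular is a simplification made here.

```python
def classify_kind(kind: int) -> str:
    """Map an event kind to its NIP-01 storage class."""
    if kind in (0, 3) or 10000 <= kind < 20000:
        return "replaceable"
    if 20000 <= kind < 30000:
        return "ephemeral"
    if 30000 <= kind < 40000:
        return "addressable"
    return "regular"  # simplification: everything else is stored as-is


def should_store(kind: int) -> bool:
    """Ephemeral events are relayed to subscribers but never persisted."""
    return classify_kind(kind) != "ephemeral"
```

Under these ranges the kind 23456 admin events are ephemeral, while kind 33334 falls in the addressable range.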

## Quick Debugging Commands
```bash
# Check relay status
ps aux | grep c_relay_ && netstat -tln | grep 8888

# View logs
tail -f relay.log

# Test WebSocket connection
wscat -c ws://localhost:8888

# Test NIP-11 endpoint
curl -H "Accept: application/nostr+json" http://localhost:8888

# Find database files
find . -name "*.db" -type f
```

142

Dockerfile.alpine-musl

Normal file

@@ -0,0 +1,142 @@
# Alpine-based MUSL static binary builder for C-Relay
# Produces truly portable binaries with zero runtime dependencies

ARG DEBUG_BUILD=false

FROM alpine:3.19 AS builder

# Re-declare build argument in this stage
ARG DEBUG_BUILD=false

# Install build dependencies
RUN apk add --no-cache \
    build-base \
    musl-dev \
    git \
    cmake \
    pkgconfig \
    autoconf \
    automake \
    libtool \
    openssl-dev \
    openssl-libs-static \
    zlib-dev \
    zlib-static \
    curl-dev \
    curl-static \
    sqlite-dev \
    sqlite-static \
    linux-headers \
    wget \
    bash

# Set working directory
WORKDIR /build

# Build libsecp256k1 static (cached layer - only rebuilds if Alpine version changes)
RUN cd /tmp && \
    git clone https://github.com/bitcoin-core/secp256k1.git && \
    cd secp256k1 && \
    ./autogen.sh && \
    ./configure --enable-static --disable-shared --prefix=/usr \
        CFLAGS="-fPIC" && \
    make -j$(nproc) && \
    make install && \
    rm -rf /tmp/secp256k1

# Build libwebsockets static with minimal features (cached layer)
RUN cd /tmp && \
    git clone --depth 1 --branch v4.3.3 https://github.com/warmcat/libwebsockets.git && \
    cd libwebsockets && \
    mkdir build && cd build && \
    cmake .. \
        -DLWS_WITH_STATIC=ON \
        -DLWS_WITH_SHARED=OFF \
        -DLWS_WITH_SSL=ON \
        -DLWS_WITHOUT_TESTAPPS=ON \
        -DLWS_WITHOUT_TEST_SERVER=ON \
        -DLWS_WITHOUT_TEST_CLIENT=ON \
        -DLWS_WITHOUT_TEST_PING=ON \
        -DLWS_WITH_HTTP2=OFF \
        -DLWS_WITH_LIBUV=OFF \
        -DLWS_WITH_LIBEVENT=OFF \
        -DLWS_IPV6=ON \
        -DCMAKE_BUILD_TYPE=Release \
        -DCMAKE_INSTALL_PREFIX=/usr \
        -DCMAKE_C_FLAGS="-fPIC" && \
    make -j$(nproc) && \
    make install && \
    rm -rf /tmp/libwebsockets

# Copy only submodule configuration and git directory
COPY .gitmodules /build/.gitmodules
COPY .git /build/.git

# Clean up any stale submodule references (nips directory is not a submodule)
RUN git rm --cached nips 2>/dev/null || true

# Initialize submodules (cached unless .gitmodules changes)
RUN git submodule update --init --recursive

# Copy nostr_core_lib source files (cached unless nostr_core_lib changes)
COPY nostr_core_lib /build/nostr_core_lib/

# Copy c_utils_lib source files (cached unless c_utils_lib changes)
COPY c_utils_lib /build/c_utils_lib/

# Build c_utils_lib with MUSL-compatible flags (cached unless c_utils_lib changes)
RUN cd c_utils_lib && \
    sed -i 's/CFLAGS = -Wall -Wextra -std=c99 -O2 -g/CFLAGS = -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0 -Wall -Wextra -std=c99 -O2 -g/' Makefile && \
    make clean && \
    make

# Build nostr_core_lib with required NIPs (cached unless nostr_core_lib changes)
# Disable fortification in build.sh to prevent __*_chk symbol issues
# NIPs: 001(Basic), 006(Keys), 013(PoW), 017(DMs), 019(Bech32), 044(Encryption), 059(Gift Wrap - required by NIP-17)
RUN cd nostr_core_lib && \
    chmod +x build.sh && \
    sed -i 's/CFLAGS="-Wall -Wextra -std=c99 -fPIC -O2"/CFLAGS="-U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0 -Wall -Wextra -std=c99 -fPIC -O2"/' build.sh && \
    rm -f *.o *.a 2>/dev/null || true && \
    ./build.sh --nips=1,6,13,17,19,44,59

# Copy c-relay source files LAST (only this layer rebuilds on source changes)
COPY src/ /build/src/
COPY Makefile /build/Makefile

# Build c-relay with full static linking (only rebuilds when src/ changes)
# Disable fortification to avoid __*_chk symbols that don't exist in MUSL
# Use conditional compilation flags based on DEBUG_BUILD argument
RUN if [ "$DEBUG_BUILD" = "true" ]; then \
        CFLAGS="-g -O0 -DDEBUG"; \
        STRIP_CMD=""; \
        echo "Building with DEBUG symbols enabled"; \
    else \
        CFLAGS="-O2"; \
        STRIP_CMD="strip /build/c_relay_static"; \
        echo "Building optimized production binary"; \
    fi && \
    gcc -static $CFLAGS -Wall -Wextra -std=c99 \
        -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0 \
        -I. -Ic_utils_lib/src -Inostr_core_lib -Inostr_core_lib/nostr_core \
        -Inostr_core_lib/cjson -Inostr_core_lib/nostr_websocket \
        src/main.c src/config.c src/dm_admin.c src/request_validator.c \
        src/nip009.c src/nip011.c src/nip013.c src/nip040.c src/nip042.c \
        src/websockets.c src/subscriptions.c src/api.c src/embedded_web_content.c \
        -o /build/c_relay_static \
        c_utils_lib/libc_utils.a \
        nostr_core_lib/libnostr_core_x64.a \
        -lwebsockets -lssl -lcrypto -lsqlite3 -lsecp256k1 \
        -lcurl -lz -lpthread -lm -ldl && \
    eval "$STRIP_CMD"

# Verify it's truly static
RUN echo "=== Binary Information ===" && \
    file /build/c_relay_static && \
    ls -lh /build/c_relay_static && \
    echo "=== Checking for dynamic dependencies ===" && \
    (ldd /build/c_relay_static 2>&1 || echo "Binary is static") && \
    echo "=== Build complete ==="

# Output stage - just the binary
FROM scratch AS output
COPY --from=builder /build/c_relay_static /c_relay_static

96

Makefile

@@ -2,15 +2,16 @@

CC = gcc
CFLAGS = -Wall -Wextra -std=c99 -g -O2
INCLUDES = -I. -Inostr_core_lib -Inostr_core_lib/nostr_core -Inostr_core_lib/cjson -Inostr_core_lib/nostr_websocket
LIBS = -lsqlite3 -lwebsockets -lz -ldl -lpthread -lm -L/usr/local/lib -lsecp256k1 -lssl -lcrypto -L/usr/local/lib -lcurl
INCLUDES = -I. -Ic_utils_lib/src -Inostr_core_lib -Inostr_core_lib/nostr_core -Inostr_core_lib/cjson -Inostr_core_lib/nostr_websocket
LIBS = -lsqlite3 -lwebsockets -lz -ldl -lpthread -lm -L/usr/local/lib -lsecp256k1 -lssl -lcrypto -L/usr/local/lib -lcurl -Lc_utils_lib -lc_utils

# Build directory
BUILD_DIR = build

# Source files
MAIN_SRC = src/main.c
MAIN_SRC = src/main.c src/config.c src/dm_admin.c src/request_validator.c src/nip009.c src/nip011.c src/nip013.c src/nip040.c src/nip042.c src/websockets.c src/subscriptions.c src/api.c src/embedded_web_content.c
NOSTR_CORE_LIB = nostr_core_lib/libnostr_core_x64.a
C_UTILS_LIB = c_utils_lib/libc_utils.a

# Architecture detection
ARCH = $(shell uname -m)
@@ -32,23 +33,66 @@ $(BUILD_DIR):
	mkdir -p $(BUILD_DIR)

# Check if nostr_core_lib is built
# Explicitly specify NIPs to ensure NIP-44 (encryption) is included
# NIPs: 1 (basic), 6 (keys), 13 (PoW), 17 (DMs), 19 (bech32), 44 (encryption), 59 (gift wrap)
$(NOSTR_CORE_LIB):
	@echo "Building nostr_core_lib..."
	cd nostr_core_lib && ./build.sh
	@echo "Building nostr_core_lib with required NIPs (including NIP-44 for encryption)..."
	cd nostr_core_lib && ./build.sh --nips=1,6,13,17,19,44,59

# Check if c_utils_lib is built
$(C_UTILS_LIB):
	@echo "Building c_utils_lib..."
	cd c_utils_lib && ./build.sh lib

# Update main.h version information (requires main.h to exist)
src/main.h:
	@if [ ! -f src/main.h ]; then \
		echo "ERROR: src/main.h not found!"; \
		echo "Please ensure src/main.h exists with relay metadata."; \
		echo "Copy from a backup or create manually with proper relay configuration."; \
		exit 1; \
	fi; \
	if [ -d .git ]; then \
		echo "Updating main.h version information from git tags..."; \
		RAW_VERSION=$$(git describe --tags --always 2>/dev/null || echo "unknown"); \
		if echo "$$RAW_VERSION" | grep -q "^v[0-9]"; then \
			CLEAN_VERSION=$$(echo "$$RAW_VERSION" | sed 's/^v//' | cut -d- -f1); \
			VERSION="v$$CLEAN_VERSION"; \

|

||||

MAJOR=$$(echo "$$CLEAN_VERSION" | cut -d. -f1); \

|

||||

MINOR=$$(echo "$$CLEAN_VERSION" | cut -d. -f2); \

|

||||

PATCH=$$(echo "$$CLEAN_VERSION" | cut -d. -f3); \

|

||||

else \

|

||||

VERSION="v0.0.0"; \

|

||||

MAJOR=0; MINOR=0; PATCH=0; \

|

||||

fi; \

|

||||

echo "Updating version information in existing main.h..."; \

|

||||

sed -i "s/#define VERSION \".*\"/#define VERSION \"$$VERSION\"/g" src/main.h; \

|

||||

sed -i "s/#define VERSION_MAJOR [0-9]*/#define VERSION_MAJOR $$MAJOR/g" src/main.h; \

|

||||

sed -i "s/#define VERSION_MINOR [0-9]*/#define VERSION_MINOR $$MINOR/g" src/main.h; \

|

||||

sed -i "s/#define VERSION_PATCH [0-9]*/#define VERSION_PATCH $$PATCH/g" src/main.h; \

|

||||

echo "Updated main.h version to: $$VERSION"; \

|

||||

else \

|

||||

echo "Git not available, preserving existing main.h version information"; \

|

||||

fi

|

||||

|

||||

# Update main.h version information (requires existing main.h)

|

||||

force-version:

|

||||

@echo "Force updating main.h version information..."

|

||||

@$(MAKE) src/main.h

|

||||

|

||||

# Build the relay

|

||||

$(TARGET): $(BUILD_DIR) $(MAIN_SRC) $(NOSTR_CORE_LIB)

|

||||

$(TARGET): $(BUILD_DIR) src/main.h src/sql_schema.h $(MAIN_SRC) $(NOSTR_CORE_LIB) $(C_UTILS_LIB)

|

||||

@echo "Compiling C-Relay for architecture: $(ARCH)"

|

||||

$(CC) $(CFLAGS) $(INCLUDES) $(MAIN_SRC) -o $(TARGET) $(NOSTR_CORE_LIB) $(LIBS)

|

||||

$(CC) $(CFLAGS) $(INCLUDES) $(MAIN_SRC) -o $(TARGET) $(NOSTR_CORE_LIB) $(C_UTILS_LIB) $(LIBS)

|

||||

@echo "Build complete: $(TARGET)"

|

||||

|

||||

# Build for specific architectures

|

||||

x86: $(BUILD_DIR) $(MAIN_SRC) $(NOSTR_CORE_LIB)

|

||||

x86: $(BUILD_DIR) src/main.h src/sql_schema.h $(MAIN_SRC) $(NOSTR_CORE_LIB) $(C_UTILS_LIB)

|

||||

@echo "Building C-Relay for x86_64..."

|

||||

$(CC) $(CFLAGS) $(INCLUDES) $(MAIN_SRC) -o $(BUILD_DIR)/c_relay_x86 $(NOSTR_CORE_LIB) $(LIBS)

|

||||

$(CC) $(CFLAGS) $(INCLUDES) $(MAIN_SRC) -o $(BUILD_DIR)/c_relay_x86 $(NOSTR_CORE_LIB) $(C_UTILS_LIB) $(LIBS)

|

||||

@echo "Build complete: $(BUILD_DIR)/c_relay_x86"

|

||||

|

||||

arm64: $(BUILD_DIR) $(MAIN_SRC) $(NOSTR_CORE_LIB)

|

||||

arm64: $(BUILD_DIR) src/main.h src/sql_schema.h $(MAIN_SRC) $(NOSTR_CORE_LIB) $(C_UTILS_LIB)

|

||||

@echo "Cross-compiling C-Relay for ARM64..."

|

||||

@if ! command -v aarch64-linux-gnu-gcc >/dev/null 2>&1; then \

|

||||

echo "ERROR: ARM64 cross-compiler not found."; \

|

||||

@@ -72,7 +116,7 @@ arm64: $(BUILD_DIR) $(MAIN_SRC) $(NOSTR_CORE_LIB)

|

||||

fi

|

||||

@echo "Using aarch64-linux-gnu-gcc with ARM64 libraries..."

|

||||

PKG_CONFIG_PATH=/usr/lib/aarch64-linux-gnu/pkgconfig:/usr/share/pkgconfig \

|

||||

aarch64-linux-gnu-gcc $(CFLAGS) $(INCLUDES) $(MAIN_SRC) -o $(BUILD_DIR)/c_relay_arm64 $(NOSTR_CORE_LIB) \

|

||||

aarch64-linux-gnu-gcc $(CFLAGS) $(INCLUDES) $(MAIN_SRC) -o $(BUILD_DIR)/c_relay_arm64 $(NOSTR_CORE_LIB) $(C_UTILS_LIB) \

|

||||

-L/usr/lib/aarch64-linux-gnu $(LIBS)

|

||||

@echo "Build complete: $(BUILD_DIR)/c_relay_arm64"

|

||||

|

||||

@@ -112,19 +156,21 @@ test: $(TARGET)

|

||||

@echo "Running tests..."

|

||||

./tests/1_nip_test.sh

|

||||

|

||||

# Initialize database

|

||||

# Initialize database (now handled automatically when server starts)

|

||||

init-db:

|

||||

@echo "Initializing database..."

|

||||

./db/init.sh --force

|

||||

@echo "Database initialization is now handled automatically when the server starts."

|

||||

@echo "The schema is embedded in the binary - no external files needed."

|

||||

@echo "To manually recreate database: rm -f db/c_nostr_relay.db && ./build/c_relay_x86"

|

||||

|

||||

# Clean build artifacts

|

||||

clean:

|

||||

rm -rf $(BUILD_DIR)

|

||||

@echo "Clean complete"

|

||||

|

||||

# Clean everything including nostr_core_lib

|

||||

# Clean everything including nostr_core_lib and c_utils_lib

|

||||

clean-all: clean

|

||||

cd nostr_core_lib && make clean 2>/dev/null || true

|

||||

cd c_utils_lib && make clean 2>/dev/null || true

|

||||

|

||||

# Install dependencies (Ubuntu/Debian)

|

||||

install-deps:

|

||||

@@ -158,5 +204,23 @@ help:

|

||||

@echo " make check-toolchain # Check what compilers are available"

|

||||

@echo " make test # Run tests"

|

||||

@echo " make init-db # Set up database"

|

||||

@echo " make force-version # Force regenerate main.h from git"

|

||||

|

||||

.PHONY: all x86 arm64 test init-db clean clean-all install-deps install-cross-tools install-arm64-deps check-toolchain help

|

||||

# Build fully static MUSL binaries using Docker

|

||||

static-musl-x86_64:

|

||||

@echo "Building fully static MUSL binary for x86_64..."

|

||||

docker buildx build --platform linux/amd64 -f examples/deployment/static-builder.Dockerfile -t c-relay-static-builder-x86_64 --load .

|

||||

docker run --rm -v $(PWD)/build:/output c-relay-static-builder-x86_64 sh -c "cp /c_relay_static_musl_x86_64 /output/"

|

||||

@echo "Static binary created: build/c_relay_static_musl_x86_64"

|

||||

|

||||

static-musl-arm64:

|

||||

@echo "Building fully static MUSL binary for ARM64..."

|

||||

docker buildx build --platform linux/arm64 -f examples/deployment/static-builder.Dockerfile -t c-relay-static-builder-arm64 --load .

|

||||

docker run --rm -v $(PWD)/build:/output c-relay-static-builder-arm64 sh -c "cp /c_relay_static_musl_x86_64 /output/c_relay_static_musl_arm64"

|

||||

@echo "Static binary created: build/c_relay_static_musl_arm64"

|

||||

|

||||

static-musl: static-musl-x86_64 static-musl-arm64

|

||||

@echo "Built static MUSL binaries for both architectures"

|

||||

|

||||

.PHONY: static-musl-x86_64 static-musl-arm64 static-musl

|

||||

.PHONY: all x86 arm64 test init-db clean clean-all install-deps install-cross-tools install-arm64-deps check-toolchain help force-version

|

||||

65

NOSTR_RELEASE.md

Normal file


@@ -0,0 +1,65 @@

|

||||

# Relay

|

||||

|

||||

I am releasing the code for the nostr relay that I wrote to use myself. The code is free for anyone to use in any way that they wish.

|

||||

|

||||

Some of the features of this relay are conventional, and some are unconventional.

|

||||

|

||||

## The conventional

|

||||

|

||||

This relay is written in C99 with a sqlite database.

|

||||

|

||||

It implements the following NIPs.

|

||||

|

||||

- [x] NIP-01: Basic protocol flow implementation

|

||||

- [x] NIP-09: Event deletion

|

||||

- [x] NIP-11: Relay information document

|

||||

- [x] NIP-13: Proof of Work

|

||||

- [x] NIP-15: End of Stored Events Notice

|

||||

- [x] NIP-20: Command Results

|

||||

- [x] NIP-33: Parameterized Replaceable Events

|

||||

- [x] NIP-40: Expiration Timestamp

|

||||

- [x] NIP-42: Authentication of clients to relays

|

||||

- [x] NIP-45: Counting results

|

||||

- [x] NIP-50: Keywords filter

|

||||

- [x] NIP-70: Protected Events

|

||||

|

||||

## The unconventional

|

||||

|

||||

### The binaries are fully self-contained.

|

||||

|

||||

It should just run on Linux without you having to worry about what is installed on your system. I want to download and run. No Docker. No dependency hell.

|

||||

|

||||

I'm not bothering with other operating systems.

|

||||

|

||||

### The relay is a full nostr citizen with its own public and private keys.

|

||||

|

||||

For example, you can see my relay's profile (wss://relay.laantungir.net) running here:

|

||||

|

||||

[Primal link](https://primal.net/p/nprofile1qqswn2jsmm8lq8evas0v9vhqkdpn9nuujt90mtz60nqgsxndy66es4qjjnhr7)

|

||||

|

||||

What this means in practice is that when you start the relay, it generates keys for itself and for its administrator. (You can specify these if you wish.)

|

||||

|

||||

Now the program and the administrator can have verified communication between the two, simply by using nostr events. For example, the administrator can send DMs to the relay, asking for its status and changing its configuration, through any client that can handle NIP-17 DMs. The relay can also send notifications to the administrator about its current status, or it can publish its status on a regular schedule directly to Nostr as kind-1 notes.

|

||||

|

||||

Also included is a more standard administrative web front end. This front end communicates with the relay using an extensive API, which again consists of nostr events signed by the administrator. You can completely control the relay through signed nostr events.

|

||||

|

||||

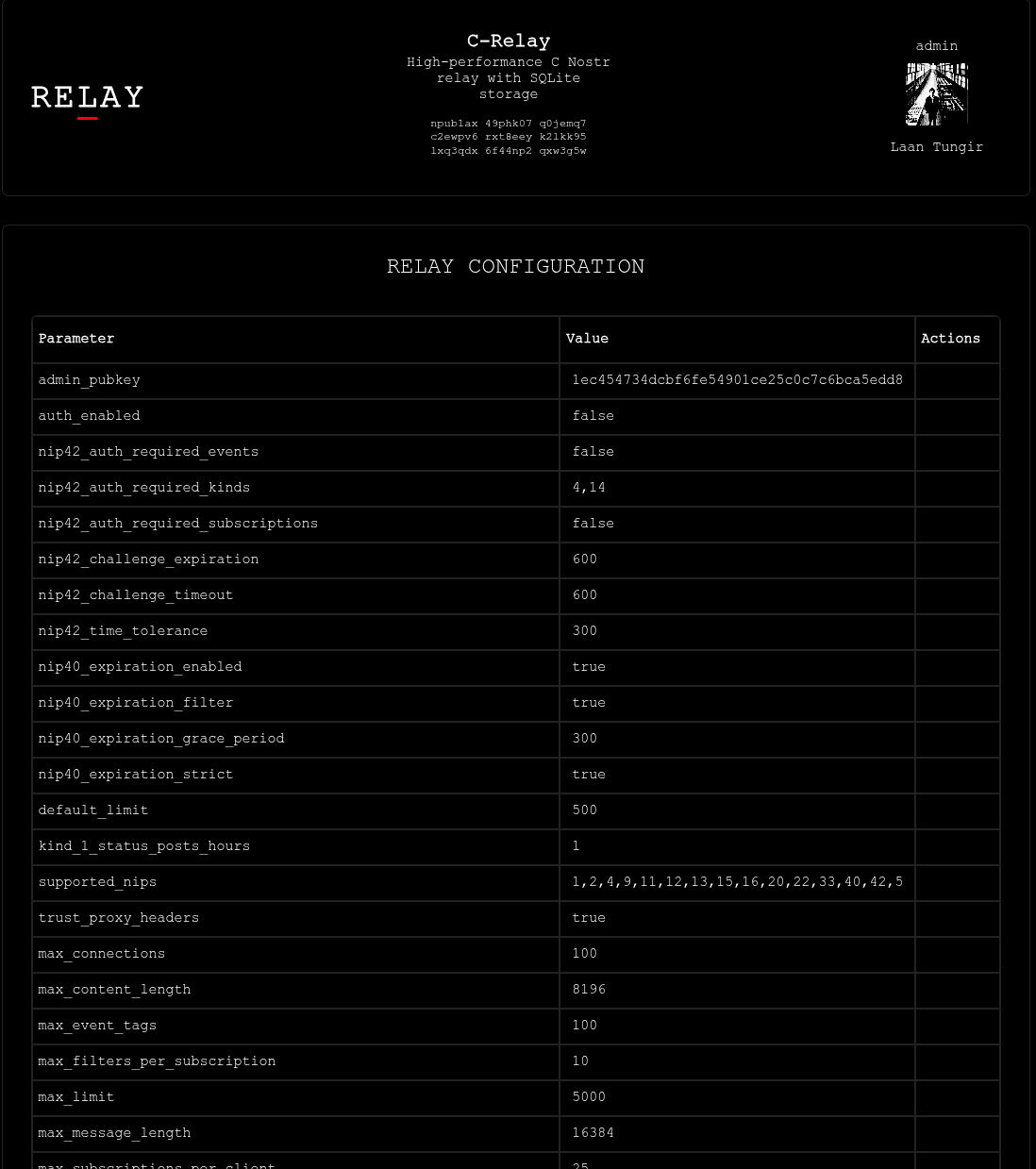

## Screenshots

|

||||

|

||||

|

||||

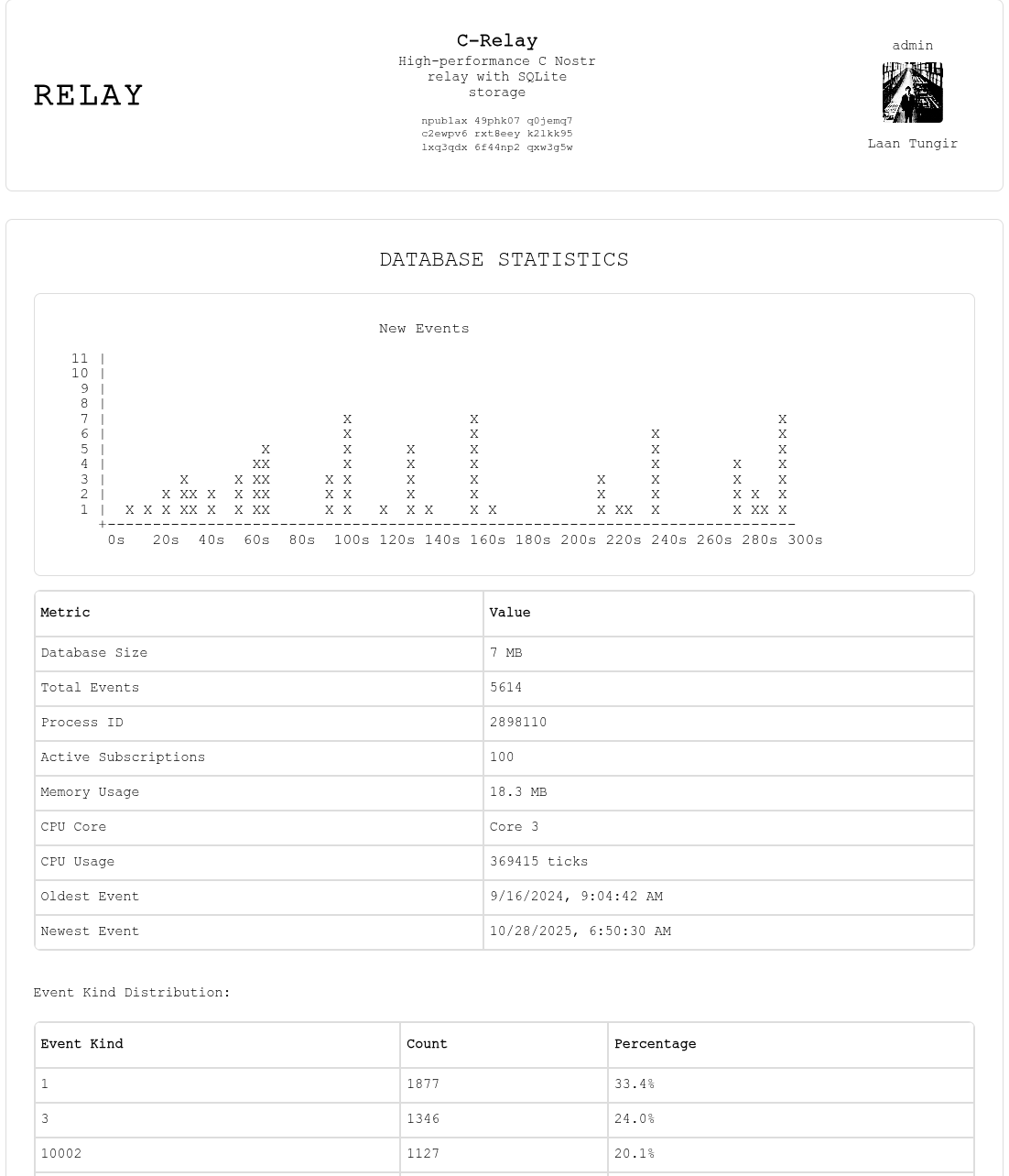

Main page with real time updates.

|

||||

|

||||

|

||||

Set your configuration preferences.

|

||||

|

||||

|

||||

View current subscriptions

|

||||

|

||||

|

||||

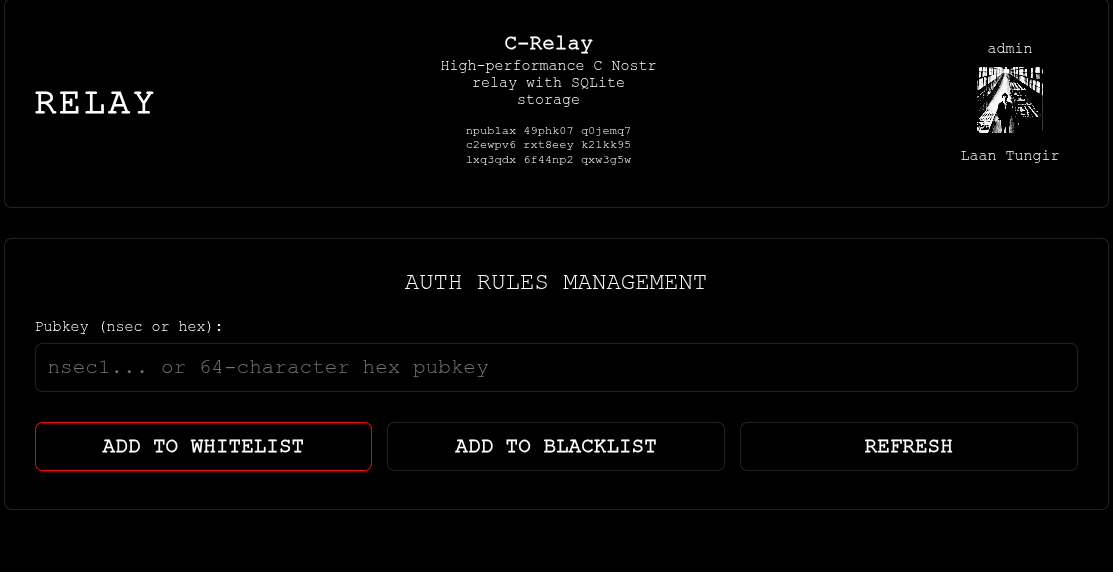

Add npubs to white or black lists.

|

||||

|

||||

|

||||

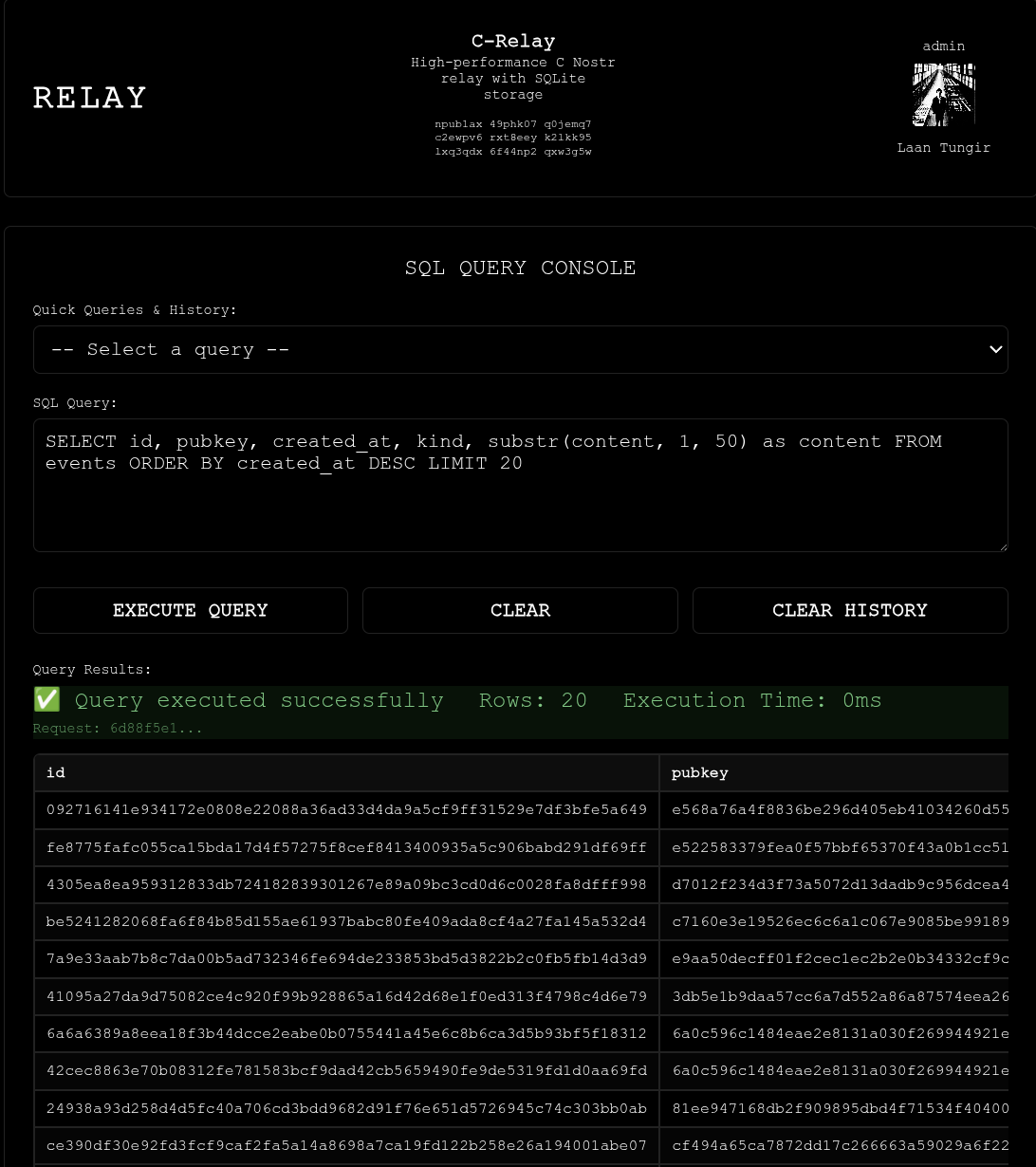

Run sql queries on the database.

|

||||

|

||||

|

||||

Light mode.

|

||||

|

||||

481

README.md


@@ -1,29 +1,474 @@

|

||||

A nostr relay in C with sqlite on the back end.

|

||||

# C-Nostr Relay

|

||||

|

||||

A high-performance Nostr relay implemented in C with SQLite backend, featuring nostr event-based management.

|

||||

|

||||

## Supported NIPs

|

||||

|

||||

<!--

|

||||

NOTE FOR ASSISTANTS: When updating the NIPs checklist below, ONLY change [ ] to [x] to mark as complete.

|

||||

Do NOT modify the formatting, add emojis, or change the text. Keep the simple format consistent.

|

||||

-->

|

||||

|

||||

|

||||

### [NIPs](https://github.com/nostr-protocol/nips)

|

||||

|

||||

- [x] NIP-01: Basic protocol flow implementation

|

||||

- [x] NIP-09: Event deletion

|

||||

- [ ] NIP-11: Relay information document

|

||||

- [ ] NIP-12: Generic tag queries

|

||||

- [ ] NIP-13: Proof of Work

|

||||

- [x] NIP-11: Relay information document

|

||||

- [x] NIP-13: Proof of Work

|

||||

- [x] NIP-15: End of Stored Events Notice

|

||||

- [ ] NIP-16: Event Treatment

|

||||

- [x] NIP-20: Command Results

|

||||

- [ ] NIP-22: Event `created_at` Limits

|

||||

- [ ] NIP-25: Reactions

|

||||

- [ ] NIP-26: Delegated Event Signing

|

||||

- [ ] NIP-28: Public Chat

|

||||

- [ ] NIP-33: Parameterized Replaceable Events

|

||||

- [ ] NIP-40: Expiration Timestamp

|

||||

- [ ] NIP-42: Authentication of clients to relays

|

||||

- [ ] NIP-45: Counting results. [experimental](#count)

|

||||

- [ ] NIP-50: Keywords filter. [experimental](#search)

|

||||

- [ ] NIP-70: Protected Events

|

||||

- [x] NIP-33: Parameterized Replaceable Events

|

||||

- [x] NIP-40: Expiration Timestamp

|

||||

- [x] NIP-42: Authentication of clients to relays

|

||||

- [x] NIP-45: Counting results

|

||||

- [x] NIP-50: Keywords filter

|

||||

- [x] NIP-70: Protected Events

|

||||

|

||||

## Quick Start

|

||||

|

||||

Get your C-Relay up and running in minutes with a static binary (no dependencies required):

|

||||

|

||||

### 1. Download Static Binary

|

||||

|

||||

Download the latest static release from the [releases page](https://git.laantungir.net/laantungir/c-relay/releases):

|

||||

|

||||

```bash

|

||||

# Static binary - works on all Linux distributions (no dependencies)

|

||||

wget https://git.laantungir.net/laantungir/c-relay/releases/download/v0.6.0/c-relay-v0.6.0-linux-x86_64-static

|

||||

chmod +x c-relay-v0.6.0-linux-x86_64-static

|

||||

mv c-relay-v0.6.0-linux-x86_64-static c-relay

|

||||

```

|

||||

|

||||

### 2. Start the Relay

|

||||

|

||||

Simply run the binary - no configuration files needed:

|

||||

|

||||

```bash

|

||||

./c-relay

|

||||

```

|

||||

|

||||

On first startup, you'll see:

|

||||

- **Admin Private Key**: Save this securely! You'll need it for administration

|

||||

- **Relay Public Key**: Your relay's identity on the Nostr network

|

||||

- **Port Information**: Default is 8888, or the next available port

|

||||

|

||||

### 3. Access the Web Interface

|

||||

|

||||

Open your browser and navigate to:

|

||||

```

|

||||

http://localhost:8888/api/

|

||||

```

|

||||

|

||||

The web interface provides:

|

||||

- Real-time configuration management

|

||||

- Database statistics dashboard

|

||||

- Auth rules management

|

||||

- Secure admin authentication with your Nostr identity

|

||||

|

||||

### 4. Test Your Relay

|

||||

|

||||

Test basic connectivity:

|

||||

```bash

|

||||

# Fetch the NIP-11 relay information document over HTTP

|

||||

curl -H "Accept: application/nostr+json" http://localhost:8888

|

||||

|

||||

# Test with a Nostr client

|

||||

# Add ws://localhost:8888 to your client's relay list

|

||||

```
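The same check can be scripted. The following is a minimal sketch using only the Python standard library, assuming the relay listens on `http://localhost:8888` as in the steps above. The function names are hypothetical and not part of C-Relay; `name` and `supported_nips` are standard NIP-11 fields.

```python
import json
import urllib.request


def build_nip11_request(base_url: str) -> urllib.request.Request:
    """Build a GET request asking the relay for its NIP-11 information document."""
    # The Accept header is what distinguishes a NIP-11 request from a normal page load.
    return urllib.request.Request(base_url, headers={"Accept": "application/nostr+json"})


def fetch_relay_info(base_url: str, timeout: float = 5.0) -> dict:
    """Send the request and decode the JSON document (requires a running relay)."""
    with urllib.request.urlopen(build_nip11_request(base_url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With a relay running locally, `fetch_relay_info("http://localhost:8888").get("supported_nips")` should return the list of NIPs the relay advertises.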

|

||||

|

||||

### 5. Configure Your Relay (Optional)

|

||||

|

||||

Use the web interface or send admin commands to customize:

|

||||

- Relay name and description

|

||||

- Authentication rules (whitelist/blacklist)

|

||||

- Connection limits

|

||||

- Proof-of-work requirements

|

||||

|

||||

**That's it!** Your relay is now running with zero configuration required. The event-based configuration system means you can adjust all settings through the web interface or admin API without editing config files.

|

||||

|

||||

|

||||

## Web Admin Interface

|

||||

|

||||

C-Relay includes a **built-in web-based administration interface** accessible at `http://localhost:8888/api/`. The interface provides:

|

||||

|

||||

- **Real-time Configuration Management**: View and edit all relay settings through a web UI

|

||||

- **Database Statistics Dashboard**: Monitor event counts, storage usage, and performance metrics

|

||||

- **Auth Rules Management**: Configure whitelist/blacklist rules for pubkeys

|

||||

- **NIP-42 Authentication**: Secure access using your Nostr identity

|

||||

- **Event-Based Updates**: All changes are applied as cryptographically signed Nostr events

|

||||

|

||||

The web interface serves embedded static files with no external dependencies and includes proper CORS headers for browser compatibility.

|

||||

|

||||

|

||||

## Administrator API

|

||||

|

||||

C-Relay uses an innovative **event-based administration system** where all configuration and management commands are sent as signed Nostr events using the admin private key generated during first startup. All admin commands use **NIP-44 encrypted command arrays** for security and compatibility.

|

||||

|

||||

### Authentication

|

||||

|

||||

All admin commands require signing with the admin private key displayed during first-time startup. **Save this key securely** - it cannot be recovered and is needed for all administrative operations.

|

||||

|

||||

### Event Structure

|

||||

|

||||

All admin commands use the same unified event structure with NIP-44 encrypted content:

|

||||

|

||||

**Admin Command Event:**

|

||||

```json

|

||||

{

|

||||

"id": "event_id",

|

||||

"pubkey": "admin_public_key",

|

||||

"created_at": 1234567890,

|

||||

"kind": 23456,

|

||||

"content": "AqHBUgcM7dXFYLQuDVzGwMST1G8jtWYyVvYxXhVGEu4nAb4LVw...",

|

||||

"tags": [

|

||||

["p", "relay_public_key"]

|

||||

],

|

||||

"sig": "event_signature"

|

||||

}

|

||||

```

|

||||

|

||||

The `content` field contains a NIP-44 encrypted JSON array representing the command.
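As a rough illustration of the event structure above, the sketch below assembles an unsigned kind 23456 event in Python and computes its `id` as NIP-01 defines it (SHA-256 over the compact JSON array `[0, pubkey, created_at, kind, tags, content]`). NIP-44 encryption and Schnorr signing are deliberately left out: `encrypted_content` is assumed to come from a NIP-44 library of your choice, and all function names are hypothetical.

```python
import hashlib
import json
import time

ADMIN_COMMAND_KIND = 23456  # kind shown in the admin command event above


def serialize_command(command: list) -> str:
    """Plaintext JSON array that would be NIP-44 encrypted before sending."""
    return json.dumps(command, separators=(",", ":"), ensure_ascii=False)


def nip01_event_id(pubkey: str, created_at: int, kind: int, tags: list, content: str) -> str:
    """Event id per NIP-01: sha256 of the compact JSON serialization.

    json.dumps is adequate here for hex keys and base64 ciphertext; unusual
    control characters in content may need NIP-01's exact escaping rules.
    """
    payload = json.dumps(
        [0, pubkey, created_at, kind, tags, content],
        separators=(",", ":"),
        ensure_ascii=False,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_unsigned_admin_event(admin_pubkey: str, relay_pubkey: str,
                               encrypted_content: str, created_at=None) -> dict:
    """Assemble the event without a signature; signing is left to a Nostr library."""
    created_at = int(time.time()) if created_at is None else created_at
    tags = [["p", relay_pubkey]]  # the relay is the recipient
    return {
        "id": nip01_event_id(admin_pubkey, created_at, ADMIN_COMMAND_KIND, tags, encrypted_content),
        "pubkey": admin_pubkey,
        "created_at": created_at,
        "kind": ADMIN_COMMAND_KIND,
        "content": encrypted_content,
        "tags": tags,
    }
```

The resulting dictionary still needs a `sig` field before a relay will accept it.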

|

||||

|

||||

**Admin Response Event:**

|

||||

```json

|

||||

["EVENT", "temp_sub_id", {

|

||||

"id": "response_event_id",

|

||||

"pubkey": "relay_public_key",

|

||||

"created_at": 1234567890,

|

||||

"kind": 23457,

|

||||

"content": "BpKCVhfN8eYtRmPqSvWxZnMkL2gHjUiOp3rTyEwQaS5dFg...",

|

||||

"tags": [

|

||||

["p", "admin_public_key"]

|

||||

],

|

||||

"sig": "response_event_signature"

|

||||

}]

|

||||

```

|

||||

|

||||

The `content` field contains a NIP-44 encrypted JSON response object.

|

||||

|

||||

### Admin Commands

|

||||

|

||||

All commands are sent as NIP-44 encrypted JSON arrays in the event content. The following table lists all available commands:

|

||||

|

||||

| Command Type | Command Format | Description |
|--------------|----------------|-------------|
| **Configuration Management** | | |
| `config_update` | `["config_update", [{"key": "auth_enabled", "value": "true", "data_type": "boolean", "category": "auth"}, {"key": "relay_description", "value": "My Relay", "data_type": "string", "category": "relay"}, ...]]` | Update relay configuration parameters (supports multiple updates) |
| `config_query` | `["config_query", "all"]` | Query all configuration parameters |
| **Auth Rules Management** | | |
| `auth_add_blacklist` | `["blacklist", "pubkey", "abc123..."]` | Add pubkey to blacklist |
| `auth_add_whitelist` | `["whitelist", "pubkey", "def456..."]` | Add pubkey to whitelist |
| `auth_delete_rule` | `["delete_auth_rule", "blacklist", "pubkey", "abc123..."]` | Delete specific auth rule |
| `auth_query_all` | `["auth_query", "all"]` | Query all auth rules |
| `auth_query_type` | `["auth_query", "whitelist"]` | Query specific rule type |
| `auth_query_pattern` | `["auth_query", "pattern", "abc123..."]` | Query specific pattern |
| **System Commands** | | |
| `system_clear_auth` | `["system_command", "clear_all_auth_rules"]` | Clear all auth rules |
| `system_status` | `["system_command", "system_status"]` | Get system status |
| `stats_query` | `["stats_query"]` | Get comprehensive database statistics |
| **Database Queries** | | |
| `sql_query` | `["sql_query", "SELECT * FROM events LIMIT 10"]` | Execute read-only SQL query against relay database |
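The command formats in the table are plain JSON arrays, so a client can build them with a few small helpers. This is a sketch only: the helper names are hypothetical, and the required fields for `config_update` are inferred from the example in the table rather than from a formal schema.

```python
import json

# Fields that every config_update entry carries in the table's example (an assumption).
CONFIG_UPDATE_FIELDS = {"key", "value", "data_type", "category"}


def config_update(updates: list) -> list:
    """Build ["config_update", [...]] after checking each entry has the expected fields."""
    for item in updates:
        missing = CONFIG_UPDATE_FIELDS - set(item)
        if missing:
            raise ValueError(f"config update {item!r} is missing {sorted(missing)}")
    return ["config_update", updates]


def auth_add(rule_type: str, pubkey_hex: str) -> list:
    """Build ["blacklist"|"whitelist", "pubkey", <hex>]."""
    if rule_type not in ("blacklist", "whitelist"):
        raise ValueError("rule_type must be 'blacklist' or 'whitelist'")
    return [rule_type, "pubkey", pubkey_hex]


def auth_delete(rule_type: str, pubkey_hex: str) -> list:
    """Build ["delete_auth_rule", <rule_type>, "pubkey", <hex>]."""
    return ["delete_auth_rule", rule_type, "pubkey", pubkey_hex]


def sql_query(sql: str) -> list:
    """Build ["sql_query", <sql>]; the table describes these queries as read-only."""
    return ["sql_query", sql]
```

Each helper returns a list that can be passed to `json.dumps` and then NIP-44 encrypted as the event `content`.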

|

||||

|

||||

### Available Configuration Keys

|

||||

|

||||

**Basic Relay Settings:**

|

||||

- `relay_name`: Relay name (displayed in NIP-11)

|

||||

- `relay_description`: Relay description text

|

||||

- `relay_contact`: Contact information

|

||||

- `relay_software`: Software URL

|

||||

- `relay_version`: Software version

|

||||

- `supported_nips`: Comma-separated list of supported NIP numbers (e.g., "1,2,4,9,11,12,13,15,16,20,22,33,40,42")

|

||||

- `language_tags`: Comma-separated list of supported language tags (e.g., "en,es,fr" or "*" for all)

|

||||

- `relay_countries`: Comma-separated list of supported country codes (e.g., "US,CA,MX" or "*" for all)

|

||||

- `posting_policy`: Posting policy URL or text

|

||||

- `payments_url`: Payment URL for premium features

|

||||

- `max_connections`: Maximum concurrent connections

|

||||

- `max_subscriptions_per_client`: Max subscriptions per client

|

||||

- `max_event_tags`: Maximum tags per event

|

||||

- `max_content_length`: Maximum event content length

|

||||

|

||||

**Authentication & Access Control:**

|

||||

- `auth_enabled`: Enable whitelist/blacklist auth rules (`true`/`false`)

|

||||

- `nip42_auth_required`: Enable NIP-42 cryptographic authentication (`true`/`false`)

|

||||

- `nip42_auth_required_kinds`: Event kinds requiring NIP-42 auth (comma-separated)

|

||||

- `nip42_challenge_timeout`: NIP-42 challenge expiration seconds

|

||||

|

||||

**Proof of Work & Validation:**

|

||||

- `pow_min_difficulty`: Minimum proof-of-work difficulty

|

||||

- `nip40_expiration_enabled`: Enable event expiration (`true`/`false`)

|

||||

|

||||

**Monitoring Settings:**

|

||||

- `kind_24567_reporting_throttle_sec`: Minimum seconds between monitoring events (default: 5)

|

||||

|

||||

### Dynamic Configuration Updates

|

||||

|

||||

C-Relay supports **dynamic configuration updates** without requiring a restart for most settings. Configuration parameters are categorized as either **dynamic** (can be updated immediately) or **restart-required** (require relay restart to take effect).

|

||||

|

||||

**Dynamic Configuration Parameters (No Restart Required):**

|

||||

- All relay information (NIP-11) settings: `relay_name`, `relay_description`, `relay_contact`, `relay_software`, `relay_version`, `supported_nips`, `language_tags`, `relay_countries`, `posting_policy`, `payments_url`

|

||||

- Authentication settings: `auth_enabled`, `nip42_auth_required`, `nip42_auth_required_kinds`, `nip42_challenge_timeout`

|

||||

- Subscription limits: `max_subscriptions_per_client`, `max_total_subscriptions`

|

||||

- Event validation limits: `max_event_tags`, `max_content_length`, `max_message_length`

|

||||

- Proof of Work settings: `pow_min_difficulty`, `pow_mode`

|

||||

- Event expiration settings: `nip40_expiration_enabled`, `nip40_expiration_strict`, `nip40_expiration_filter`, `nip40_expiration_grace_period`

|

||||

|

||||

**Restart-Required Configuration Parameters:**

|

||||

- Connection settings: `max_connections`, `relay_port`

|

||||

- Database and core system settings

|

||||

|

||||

When updating configuration, the admin API response will indicate whether a restart is required for each parameter. Dynamic updates take effect immediately and are reflected in NIP-11 relay information documents without restart.
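A client can also pre-sort a batch of changes before sending it. The sketch below groups keys using only the two lists given above. It is a client-side convenience under that assumption, not the relay's own logic, and the response from the relay remains authoritative. Keys that appear in neither list (for example the unnamed "database and core system settings") are reported as unknown.

```python
# Keys named in the "Restart-Required" list above.
RESTART_REQUIRED_KEYS = {"max_connections", "relay_port"}

# Keys named in the "Dynamic" list above.
DYNAMIC_KEYS = {
    "relay_name", "relay_description", "relay_contact", "relay_software",
    "relay_version", "supported_nips", "language_tags", "relay_countries",
    "posting_policy", "payments_url",
    "auth_enabled", "nip42_auth_required", "nip42_auth_required_kinds",
    "nip42_challenge_timeout",
    "max_subscriptions_per_client", "max_total_subscriptions",
    "max_event_tags", "max_content_length", "max_message_length",
    "pow_min_difficulty", "pow_mode",
    "nip40_expiration_enabled", "nip40_expiration_strict",
    "nip40_expiration_filter", "nip40_expiration_grace_period",
}


def classify_updates(keys) -> dict:
    """Group configuration keys by whether the documentation says a restart is needed."""
    result = {"dynamic": [], "restart_required": [], "unknown": []}
    for key in keys:
        if key in RESTART_REQUIRED_KEYS:
            result["restart_required"].append(key)
        elif key in DYNAMIC_KEYS:
            result["dynamic"].append(key)
        else:
            result["unknown"].append(key)
    return result
```

For instance, a batch containing `relay_name` and `relay_port` would be split into one dynamic key and one that needs a restart.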

|

||||

|

||||

### Response Format

|

||||

|

||||

All admin commands return **signed EVENT responses** via WebSocket following standard Nostr protocol. Responses use JSON content with structured data.

|

||||

|

||||

#### Response Examples

|

||||

|

||||

**Success Response:**

|

||||

```json

|

||||

["EVENT", "temp_sub_id", {

|

||||

"id": "response_event_id",

|

||||

"pubkey": "relay_public_key",

|

||||

"created_at": 1234567890,

|

||||

"kind": 23457,

|

||||

"content": "nip44 encrypted:{\"query_type\": \"config_update\", \"status\": \"success\", \"message\": \"Operation completed successfully\", \"timestamp\": 1234567890}",

|

||||

"tags": [

|

||||

["p", "admin_public_key"]

|

||||

],

|

||||

"sig": "response_event_signature"

|

||||

}]

|

||||

```

|

||||

|

||||

**Error Response:**

|

||||

```json

|

||||

["EVENT", "temp_sub_id", {

|

||||

"id": "response_event_id",

|

||||

"pubkey": "relay_public_key",

|

||||

"created_at": 1234567890,

|

||||

"kind": 23457,

|

||||

"content": "nip44 encrypted:{\"query_type\": \"config_update\", \"status\": \"error\", \"error\": \"invalid configuration value\", \"timestamp\": 1234567890}",

|

||||

"tags": [

|

||||

["p", "admin_public_key"]

|

||||

],

|

||||

"sig": "response_event_signature"

|

||||

}]

|

||||

```

|

||||

|

||||

**Auth Rules Query Response:**

|

||||

```json

|

||||

["EVENT", "temp_sub_id", {

|

||||

"id": "response_event_id",

|

||||

"pubkey": "relay_public_key",

|

||||

"created_at": 1234567890,

|

||||

"kind": 23457,

|

||||

"content": "nip44 encrypted:{\"query_type\": \"auth_rules_all\", \"total_results\": 2, \"timestamp\": 1234567890, \"data\": [{\"rule_type\": \"blacklist\", \"pattern_type\": \"pubkey\", \"pattern_value\": \"abc123...\", \"action\": \"allow\"}]}",

|

||||

"tags": [

|

||||

["p", "admin_public_key"]

|

||||

],

|

||||

"sig": "response_event_signature"

|

||||

}]

|

||||

```

|

||||

|

||||

**Configuration Query Response:**

|

||||

```json

|

||||

["EVENT", "temp_sub_id", {

|

||||

"id": "response_event_id",

|

||||

"pubkey": "relay_public_key",

|

||||

"created_at": 1234567890,

|

||||

"kind": 23457,

|

||||

"content": "nip44 encrypted:{\"query_type\": \"config_all\", \"total_results\": 27, \"timestamp\": 1234567890, \"data\": [{\"key\": \"auth_enabled\", \"value\": \"false\", \"data_type\": \"boolean\", \"category\": \"auth\", \"description\": \"Enable NIP-42 authentication\"}, {\"key\": \"relay_description\", \"value\": \"My Relay\", \"data_type\": \"string\", \"category\": \"relay\", \"description\": \"Relay description text\"}]}",

|

||||

"tags": [

|

||||

["p", "admin_public_key"]

|

||||

],

|

||||

"sig": "response_event_signature"

|

||||

}]

|

||||

```
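Once the `content` of a kind 23457 response has been decrypted, the payloads shown in the examples above are ordinary JSON objects. The following sketch handles them on the client side. It assumes decryption has already happened elsewhere and that the field names match the examples (`status`, `error`, `query_type`, `data`); the function name is hypothetical.

```python
import json


def summarize_admin_response(plaintext: str) -> dict:
    """Parse a decrypted admin response and return a small summary.

    Raises RuntimeError when the relay reports status "error".
    """
    payload = json.loads(plaintext)
    if payload.get("status") == "error":
        raise RuntimeError(payload.get("error", "unknown error"))
    rows = payload.get("data", [])
    return {
        "query_type": payload.get("query_type"),
        "count": len(rows),
        # Config responses carry key/value pairs; other row types are skipped here.
        "values": {row["key"]: row["value"] for row in rows if "key" in row},
    }
```

Applied to the configuration query example, the summary would map `auth_enabled` to `"false"` and `relay_description` to `"My Relay"`.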

|

||||

|

||||

**Configuration Update Success Response:**

|

||||

```json

|

||||

["EVENT", "temp_sub_id", {

|

||||

"id": "response_event_id",

|

||||

"pubkey": "relay_public_key",

|

||||

"created_at": 1234567890,

|

||||

"kind": 23457,

|

||||

"content": "nip44 encrypted:{\"query_type\": \"config_update\", \"total_results\": 2, \"timestamp\": 1234567890, \"status\": \"success\", \"data\": [{\"key\": \"auth_enabled\", \"value\": \"true\", \"status\": \"updated\"}, {\"key\": \"relay_description\", \"value\": \"My Updated Relay\", \"status\": \"updated\"}]}",

|