Compare commits

45 Commits

| Author | SHA1 | Date |
|---|---|---|
| | f272264960 | |
| | cb3171b390 | |
| | 03f036d60d | |
| | 9b35f463ae | |
| | 7b74486519 | |
| | b276b44ded | |
| | 3792649ed9 | |
| | 5f08956605 | |
| | 643d89ed7b | |
| | 8ca459593c | |
| | ee4208cc19 | |
| | f6330e4bb8 | |
| | 4f3cf10a5c | |
| | aa1954e81e | |
| | 482597bd0e | |
| | d4b90e681c | |
| | fcf9e43c4c | |
| | b8d8cd19d3 | |
| | 536c2d966c | |
| | f49cb0a5ac | |
| | cef6bb2340 | |
| | 4c03253b30 | |
| | ed09bb7370 | |
| | 5c46a25173 | |
| | d1538f00df | |
| | afa4acbbfb | |
| | d9a530485f | |
| | b2ad70b028 | |
| | f49aae8ab0 | |
| | f6debcf799 | |
| | edbc4f1359 | |
| | 5242f066e7 | |
| | af186800fa | |
| | 2bff4a5f44 | |
| | edb73d50cf | |
| | 3dc09d55fd | |
| | 079fb1b0f5 | |
| | 17b2aa8111 | |
| | 78d484cfe0 | |
| | 182e12817d | |
| | 9179d57cc9 | |
| | 9cb9b746d8 | |
| | 57a0089664 | |
| | 53f7608872 | |
| | 838ce5b45a | |

1

.gitignore

vendored

@@ -11,4 +11,3 @@ copy_executable_local.sh

|

||||

nostr_login_lite/

|

||||

style_guide/

|

||||

nostr-tools

|

||||

|

||||

|

||||

@@ -121,8 +121,8 @@ fuser -k 8888/tcp

|

||||

- Event filtering done at C level, not SQL level for NIP-40 expiration

### Configuration Override Behavior

-- CLI port override only affects first-time startup
-- After database creation, all config comes from events
+- CLI port override applies during first-time startup and existing relay restarts
+- After database creation, all config comes from events (but CLI overrides can still be applied)
- Database path cannot be changed after initialization
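
The precedence described by the revised wording (a CLI port override applies on first start and on restarts, otherwise the event-stored value is used) can be sketched as below. This is a minimal illustration, not the relay's actual code: `resolve_port` and the `NO_CLI_PORT` sentinel are hypothetical names, and treating an out-of-range CLI value as "no override" is an assumption.

```c
#include <assert.h>

/* Hypothetical sentinel meaning "no port was given on the command line". */
#define NO_CLI_PORT (-1)

/*
 * Choose the port to listen on.
 *   stored_port: value held in the configuration events in the database
 *   cli_port:    value from the command line, or NO_CLI_PORT
 */
int resolve_port(int stored_port, int cli_port)
{
    assert(stored_port > 0 && stored_port <= 65535);

    if (cli_port != NO_CLI_PORT && cli_port > 0 && cli_port <= 65535) {
        return cli_port;  /* CLI override wins, on first start and on restarts */
    }
    return stored_port;   /* otherwise fall back to the event-stored value */
}
```

Keeping the decision in one small function makes it easy to apply the same rule on both the first-start and restart paths.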

|

||||

|

||||

## Non-Obvious Pitfalls

|

||||

|

||||

@@ -5,6 +5,9 @@ ARG DEBUG_BUILD=false

|

||||

|

||||

FROM alpine:3.19 AS builder

|

||||

|

||||

# Re-declare build argument in this stage

|

||||

ARG DEBUG_BUILD=false

|

||||

|

||||

# Install build dependencies

|

||||

RUN apk add --no-cache \

|

||||

build-base \

|

||||

|

||||

65

NOSTR_RELEASE.md

Normal file

@@ -0,0 +1,65 @@

|

||||

# Relay

|

||||

|

||||

I am releasing the code for the nostr relay that I wrote to use myself. The code is free for anyone to use in any way that they wish.

|

||||

|

||||

Some of the features of this relay are conventional, and some are unconventional.

|

||||

|

||||

## The conventional

|

||||

|

||||

This relay is written in C99 with a sqlite database.

|

||||

|

||||

It implements the following NIPs.

|

||||

|

||||

- [x] NIP-01: Basic protocol flow implementation

|

||||

- [x] NIP-09: Event deletion

|

||||

- [x] NIP-11: Relay information document

|

||||

- [x] NIP-13: Proof of Work

|

||||

- [x] NIP-15: End of Stored Events Notice

|

||||

- [x] NIP-20: Command Results

|

||||

- [x] NIP-33: Parameterized Replaceable Events

|

||||

- [x] NIP-40: Expiration Timestamp

|

||||

- [x] NIP-42: Authentication of clients to relays

|

||||

- [x] NIP-45: Counting results

|

||||

- [x] NIP-50: Keywords filter

|

||||

- [x] NIP-70: Protected Events

|

||||

|

||||

## The unconventional

|

||||

|

||||

### The binaries are fully self-contained.

|

||||

|

||||

It should just run on Linux without you having to worry about what is installed on your system. I want to download and run. No Docker. No dependency hell.

|

||||

|

||||

I'm not bothering with other operating systems.

|

||||

|

||||

### The relay is a full nostr citizen with its own public and private keys.

|

||||

|

||||

For example, you can see my relay's profile (wss://relay.laantungir.net) running here:

|

||||

|

||||

[Primal link](https://primal.net/p/nprofile1qqswn2jsmm8lq8evas0v9vhqkdpn9nuujt90mtz60nqgsxndy66es4qjjnhr7)

|

||||

|

||||

What this means in practice is that when you start the relay, it generates keys for itself and for its administrator. (You can specify these if you wish.)

|

||||

|

||||

Now the program and the administrator can have verified communication with each other, simply by using nostr events. For example, the administrator can send DMs to the relay, asking for its status and changing its configuration, through any client that can handle NIP-17 DMs. The relay can also send notifications to the administrator about its current status, or it can publish its status on a regular schedule directly to nostr as kind-1 notes.

|

||||

|

||||

Also included is a more standard administrative web front end. This front end communicates with the relay using an extensive API, which again consists of nostr events signed by the administrator. You can completely control the relay through signed nostr events.

|

||||

|

||||

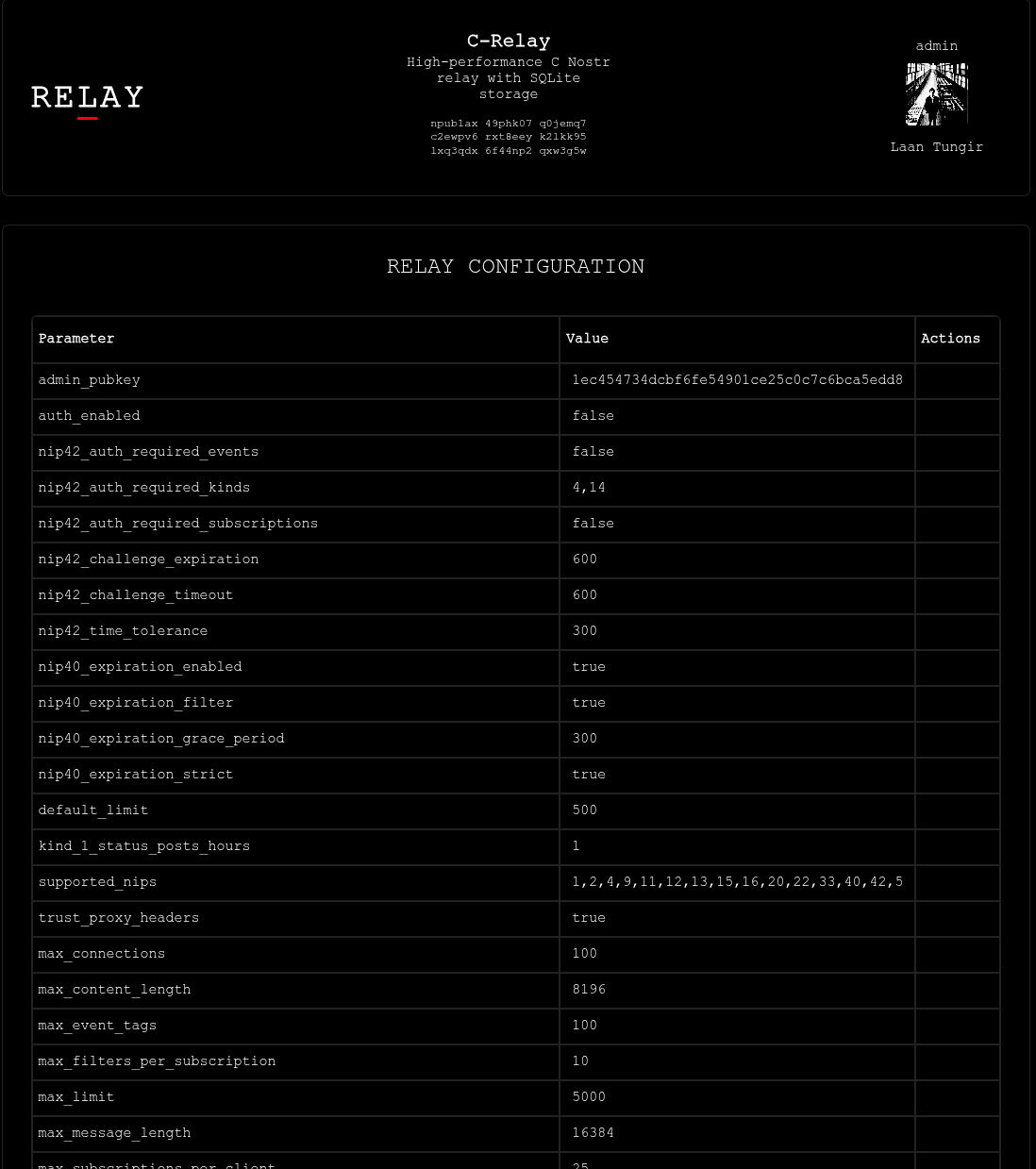

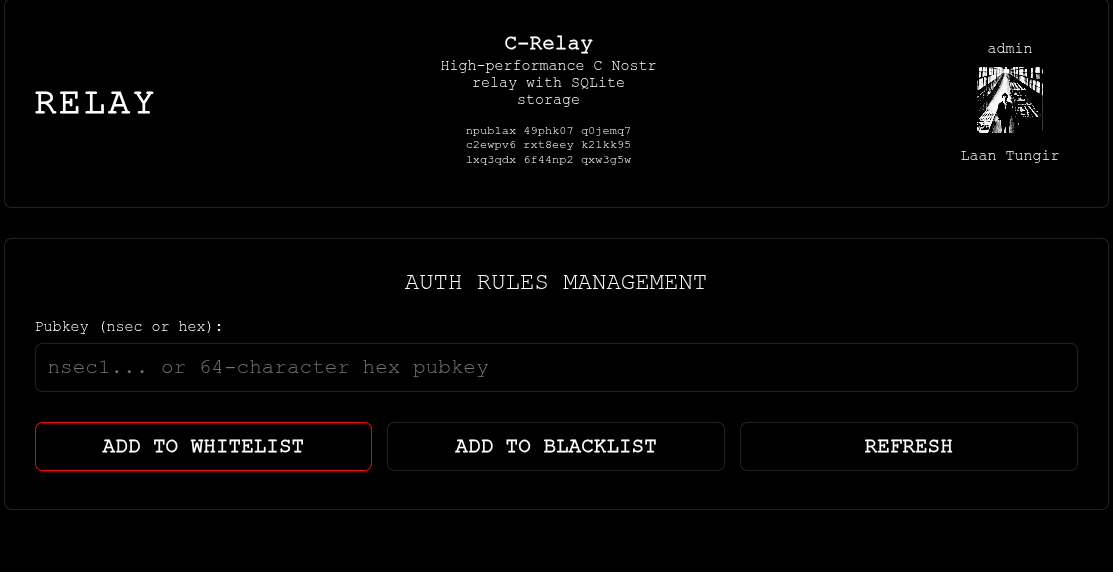

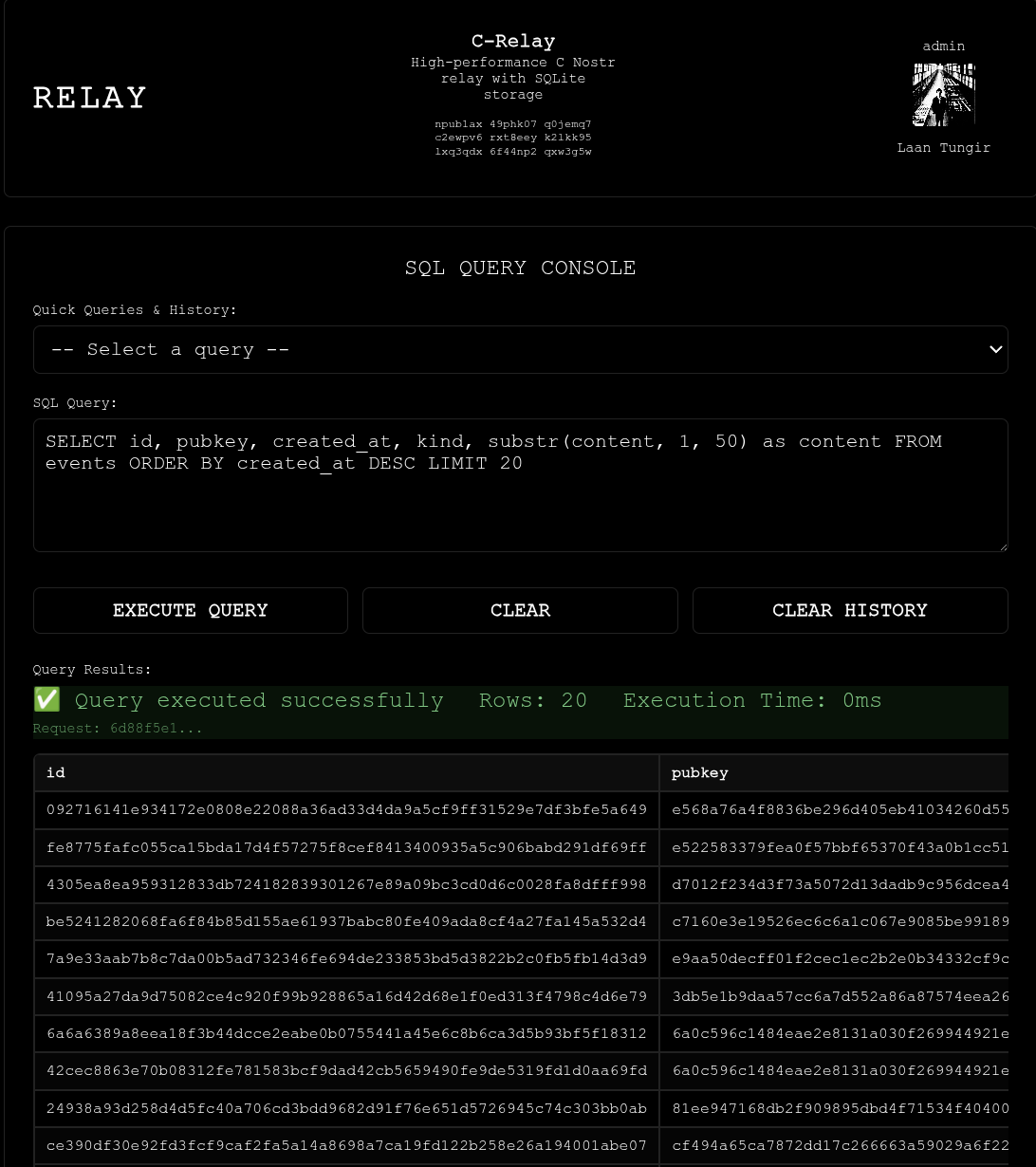

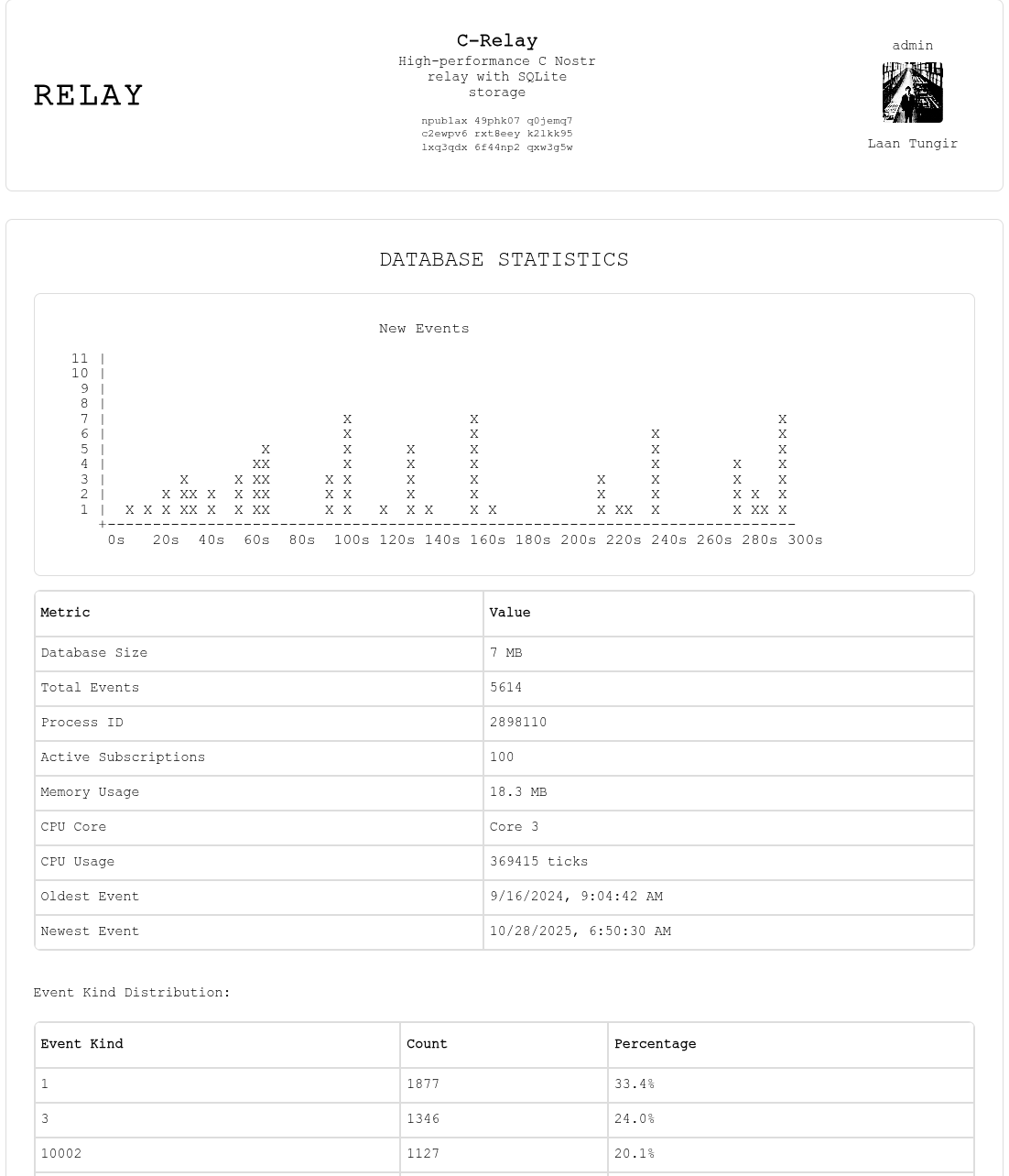

## Screenshots

|

||||

|

||||

|

||||

Main page with real time updates.

|

||||

|

||||

|

||||

Set your configuration preferences.

|

||||

|

||||

|

||||

View current subscriptions

|

||||

|

||||

|

||||

Add npubs to white or black lists.

|

||||

|

||||

|

||||

Run sql queries on the database.

|

||||

|

||||

|

||||

Light mode.

|

||||

|

||||

65

README.md

@@ -195,6 +195,9 @@ All commands are sent as NIP-44 encrypted JSON arrays in the event content. The

|

||||

- `pow_min_difficulty`: Minimum proof-of-work difficulty

|

||||

- `nip40_expiration_enabled`: Enable event expiration (`true`/`false`)

|

||||

|

||||

**Monitoring Settings:**

|

||||

- `kind_24567_reporting_throttle_sec`: Minimum seconds between monitoring events (default: 5)

|

||||

|

||||

### Dynamic Configuration Updates

|

||||

|

||||

C-Relay supports **dynamic configuration updates** without requiring a restart for most settings. Configuration parameters are categorized as either **dynamic** (can be updated immediately) or **restart-required** (require relay restart to take effect).
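
One way to picture the dynamic versus restart-required split is a small lookup table keyed by parameter name. The sketch below is purely illustrative: this excerpt does not say which parameters fall into which category, so the flags in the table are assumptions, and `relay_port` and `config_needs_restart` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

struct config_key {
    const char *name;
    bool restart_required;
};

/* Illustrative classification only; not taken from the C-Relay source. */
static const struct config_key CONFIG_KEYS[] = {
    { "pow_min_difficulty",                false },
    { "nip40_expiration_enabled",          false },
    { "kind_24567_reporting_throttle_sec", false },
    { "relay_port",                        true  },  /* hypothetical key name */
};

/* Returns 1 if a change needs a restart, 0 if it applies immediately,
 * and -1 if the key is not in the table. */
int config_needs_restart(const char *name)
{
    assert(name != NULL);

    for (size_t i = 0; i < sizeof CONFIG_KEYS / sizeof CONFIG_KEYS[0]; i++) {
        if (strcmp(CONFIG_KEYS[i].name, name) == 0) {
            return CONFIG_KEYS[i].restart_required ? 1 : 0;
        }
    }
    return -1;
}
```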

|

||||

@@ -391,6 +394,68 @@ SELECT

|

||||

|

||||

|

||||

|

||||

## Real-time Monitoring System

|

||||

|

||||

C-Relay includes a subscription-based monitoring system that broadcasts real-time relay statistics using ephemeral events (kind 24567).

|

||||

|

||||

### Activation

|

||||

|

||||

The monitoring system activates automatically when clients subscribe to kind 24567 events:

|

||||

|

||||

```json
["REQ", "monitoring-sub", {"kinds": [24567]}]
```

|

||||

|

||||

For specific monitoring types, use d-tag filters:

|

||||

|

||||

```json
["REQ", "event-kinds-sub", {"kinds": [24567], "#d": ["event_kinds"]}]
["REQ", "time-stats-sub", {"kinds": [24567], "#d": ["time_stats"]}]
["REQ", "top-pubkeys-sub", {"kinds": [24567], "#d": ["top_pubkeys"]}]
```

|

||||

|

||||

When no subscriptions exist, monitoring is dormant to conserve resources.
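
The activate-on-subscribe behaviour can be modelled as a simple counter of open subscriptions that ask for kind 24567. The sketch below is an assumption about how such bookkeeping might look, not the relay's implementation; `monitor_state` and the function names are hypothetical, and it only treats a filter as a monitoring subscription when 24567 is listed explicitly in `kinds`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MONITORING_KIND 24567

/* Hypothetical bookkeeping: how many open subscriptions ask for kind 24567. */
struct monitor_state {
    int subscribers;
};

/* True if a filter's "kinds" array explicitly lists the monitoring kind. */
bool filter_wants_monitoring(const int *kinds, size_t n_kinds)
{
    for (size_t i = 0; i < n_kinds; i++) {
        if (kinds[i] == MONITORING_KIND) {
            return true;
        }
    }
    return false;
}

/* Call when a matching subscription opens (REQ). */
void monitor_subscribe(struct monitor_state *m)
{
    assert(m != NULL);
    m->subscribers++;
}

/* Call when a matching subscription closes (CLOSE or disconnect). */
void monitor_unsubscribe(struct monitor_state *m)
{
    assert(m != NULL);
    if (m->subscribers > 0) {
        m->subscribers--;
    }
}

/* Monitoring work runs only while at least one subscriber exists. */
bool monitor_is_active(const struct monitor_state *m)
{
    assert(m != NULL);
    return m->subscribers > 0;
}
```

With this shape, the statistics code only needs to check `monitor_is_active` before doing any work, which is what keeps the idle cost low.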

|

||||

|

||||

### Monitoring Event Types

|

||||

|

||||

| Type | d Tag | Description |
|------|-------|-------------|
| Event Distribution | `event_kinds` | Event count by kind with percentages |
| Time Statistics | `time_stats` | Events in last 24h, 7d, 30d |
| Top Publishers | `top_pubkeys` | Top 10 pubkeys by event count |
| Active Subscriptions | `active_subscriptions` | Current subscription details (admin only) |
| Subscription Details | `subscription_details` | Detailed subscription info (admin only) |
| CPU Metrics | `cpu_metrics` | Process CPU and memory usage |

|

||||

|

||||

### Event Structure

|

||||

|

||||

```json
{
  "kind": 24567,
  "pubkey": "<relay_pubkey>",
  "created_at": <timestamp>,
  "content": "{\"data_type\":\"event_kinds\",\"timestamp\":1234567890,...}",
  "tags": [
    ["d", "event_kinds"]
  ]
}
```
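
The `content` field is itself a JSON string. As a rough sketch of how the two fields visible in the example (`data_type` and `timestamp`) might be serialized in C, the hypothetical helper below uses `snprintf` with an explicit truncation check. The remaining fields are elided in the example and are deliberately left out here, and the helper assumes `data_type` is a plain identifier that needs no JSON escaping.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/*
 * Write {"data_type":"...","timestamp":N} into buf.
 * Returns the number of characters written (excluding the terminator),
 * or -1 if the buffer is too small or formatting failed.
 */
int format_monitor_content(char *buf, size_t cap,
                           const char *data_type, long timestamp)
{
    assert(buf != NULL && data_type != NULL);

    int n = snprintf(buf, cap,
                     "{\"data_type\":\"%s\",\"timestamp\":%ld}",
                     data_type, timestamp);
    if (n < 0 || (size_t)n >= cap) {
        return -1;  /* error or truncated output */
    }
    return n;
}
```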

|

||||

|

||||

### Configuration

|

||||

|

||||

- `kind_24567_reporting_throttle_sec`: Minimum seconds between monitoring events (default: 5)
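
A throttle of this kind usually comes down to remembering when the last event was emitted and refusing to emit again until the minimum interval has passed. The following is a minimal sketch under that assumption; the `throttle` struct and `throttle_allow` are hypothetical names, not part of C-Relay.

```c
#include <assert.h>
#include <stdbool.h>
#include <time.h>

struct throttle {
    bool   has_emitted;       /* false until the first event goes out */
    time_t last_emit;         /* time of the most recent emitted event */
    int    min_interval_sec;  /* e.g. kind_24567_reporting_throttle_sec */
};

/* Returns true (and records `now`) if an event may be emitted at `now`. */
bool throttle_allow(struct throttle *t, time_t now)
{
    assert(t != NULL);

    if (t->has_emitted && (long)(now - t->last_emit) < t->min_interval_sec) {
        return false;  /* too soon since the previous event */
    }
    t->has_emitted = true;
    t->last_emit = now;
    return true;
}
```

With the default of 5 seconds, an event allowed at time 100 blocks another at 103 but permits one at 105.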

|

||||

|

||||

### Web Dashboard Integration

|

||||

|

||||

The built-in web dashboard (`/api/`) automatically subscribes to monitoring events and displays real-time statistics.

|

||||

|

||||

### Performance Considerations

|

||||

|

||||

- Monitoring events are ephemeral (not stored in database)

|

||||

- Throttling prevents excessive event generation

|

||||

- Automatic activation/deactivation based on subscriptions

|

||||

- Minimal overhead when no clients are monitoring

|

||||

|

||||

## Direct Messaging Admin System

|

||||

|

||||

In addition to the above admin API, C-Relay allows the administrator to direct message the relay to get information or control some settings. As long as the administrator is signed in with any nostr client that can send NIP-17 direct messages (DMs), they can control the relay.

|

||||

|

||||

612

STATIC_MUSL_GUIDE.md

Normal file

@@ -0,0 +1,612 @@

|

||||

# Static MUSL Build Guide for C Programs

|

||||

|

||||

## Overview

|

||||

|

||||

This guide explains how to build truly portable static binaries using Alpine Linux and MUSL libc. These binaries have **zero runtime dependencies** and work on any Linux distribution of the same CPU architecture without modification.

|

||||

|

||||

This guide is specifically tailored for C programs that use:

|

||||

- **nostr_core_lib** - Nostr protocol implementation

|

||||

- **nostr_login_lite** - Nostr authentication library

|

||||

- Common dependencies: libwebsockets, OpenSSL, SQLite, curl, secp256k1

|

||||

|

||||

## Why MUSL Static Binaries?

|

||||

|

||||

### Advantages Over glibc

|

||||

|

||||

| Feature | MUSL Static | glibc Static | glibc Dynamic |
|---------|-------------|--------------|---------------|
| **Portability** | ✓ Any Linux | ⚠ glibc only | ✗ Requires matching libs |
| **Binary Size** | ~7-10 MB | ~12-15 MB | ~2-3 MB |
| **Dependencies** | None | NSS libs | Many system libs |
| **Deployment** | Single file | Single file + NSS | Binary + libraries |
| **Compatibility** | Universal | glibc version issues | Library version hell |

|

||||

|

||||

### Key Benefits

|

||||

|

||||

1. **True Portability**: Works on Alpine, Ubuntu, Debian, CentOS, Arch, etc.

|

||||

2. **No Library Hell**: No `GLIBC_2.XX not found` errors

|

||||

3. **Simple Deployment**: Just copy one file

|

||||

4. **Reproducible Builds**: Same Docker image = same binary

|

||||

5. **Security**: No dependency on system libraries with vulnerabilities

|

||||

|

||||

## Quick Start

|

||||

|

||||

### Prerequisites

|

||||

|

||||

- Docker installed and running

|

||||

- Your C project with source code

|

||||

- Internet connection for downloading dependencies

|

||||

|

||||

### Basic Build Process

|

||||

|

||||

```bash
# 1. Copy the Dockerfile template (see below)
cp /path/to/c-relay/Dockerfile.alpine-musl ./Dockerfile.static

# 2. Customize for your project (see Customization section)
vim Dockerfile.static

# 3. Build the static binary
docker build --platform linux/amd64 -f Dockerfile.static -t my-app-builder .

# 4. Extract the binary
docker create --name temp-container my-app-builder
docker cp temp-container:/build/my_app_static ./my_app_static
docker rm temp-container

# 5. Verify it's static
ldd ./my_app_static  # Should show "not a dynamic executable"
```

|

||||

|

||||

## Dockerfile Template

|

||||

|

||||

Here's a complete Dockerfile template you can customize for your project:

|

||||

|

||||

```dockerfile
# Alpine-based MUSL static binary builder
# Produces truly portable binaries with zero runtime dependencies

FROM alpine:3.19 AS builder

# Install build dependencies
RUN apk add --no-cache \
    build-base \
    musl-dev \
    git \
    cmake \
    pkgconfig \
    autoconf \
    automake \
    libtool \
    openssl-dev \
    openssl-libs-static \
    zlib-dev \
    zlib-static \
    curl-dev \
    curl-static \
    sqlite-dev \
    sqlite-static \
    linux-headers \
    wget \
    bash

WORKDIR /build

# Build libsecp256k1 static (required for Nostr)
RUN cd /tmp && \
    git clone https://github.com/bitcoin-core/secp256k1.git && \
    cd secp256k1 && \
    ./autogen.sh && \
    ./configure --enable-static --disable-shared --prefix=/usr \
        CFLAGS="-fPIC" && \
    make -j$(nproc) && \
    make install && \
    rm -rf /tmp/secp256k1

# Build libwebsockets static (if needed for WebSocket support)
RUN cd /tmp && \
    git clone --depth 1 --branch v4.3.3 https://github.com/warmcat/libwebsockets.git && \
    cd libwebsockets && \
    mkdir build && cd build && \
    cmake .. \
        -DLWS_WITH_STATIC=ON \
        -DLWS_WITH_SHARED=OFF \
        -DLWS_WITH_SSL=ON \
        -DLWS_WITHOUT_TESTAPPS=ON \
        -DLWS_WITHOUT_TEST_SERVER=ON \
        -DLWS_WITHOUT_TEST_CLIENT=ON \
        -DLWS_WITHOUT_TEST_PING=ON \
        -DLWS_WITH_HTTP2=OFF \
        -DLWS_WITH_LIBUV=OFF \
        -DLWS_WITH_LIBEVENT=OFF \
        -DLWS_IPV6=ON \
        -DCMAKE_BUILD_TYPE=Release \
        -DCMAKE_INSTALL_PREFIX=/usr \
        -DCMAKE_C_FLAGS="-fPIC" && \
    make -j$(nproc) && \
    make install && \
    rm -rf /tmp/libwebsockets

# Copy git configuration for submodules
COPY .gitmodules /build/.gitmodules
COPY .git /build/.git

# Initialize submodules
RUN git submodule update --init --recursive

# Copy and build nostr_core_lib
COPY nostr_core_lib /build/nostr_core_lib/
RUN cd nostr_core_lib && \
    chmod +x build.sh && \
    sed -i 's/CFLAGS="-Wall -Wextra -std=c99 -fPIC -O2"/CFLAGS="-U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0 -Wall -Wextra -std=c99 -fPIC -O2"/' build.sh && \
    rm -f *.o *.a 2>/dev/null || true && \
    ./build.sh --nips=1,6,13,17,19,44,59

# Copy and build nostr_login_lite (if used)
# COPY nostr_login_lite /build/nostr_login_lite/
# RUN cd nostr_login_lite && make static

# Copy your application source
COPY src/ /build/src/
COPY Makefile /build/Makefile

# Build your application with full static linking
RUN gcc -static -O2 -Wall -Wextra -std=c99 \
    -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0 \
    -I. -Inostr_core_lib -Inostr_core_lib/nostr_core \
    -Inostr_core_lib/cjson -Inostr_core_lib/nostr_websocket \
    src/*.c \
    -o /build/my_app_static \
    nostr_core_lib/libnostr_core_x64.a \
    -lwebsockets -lssl -lcrypto -lsqlite3 -lsecp256k1 \
    -lcurl -lz -lpthread -lm -ldl && \
    strip /build/my_app_static

# Verify it's truly static
RUN echo "=== Binary Information ===" && \
    file /build/my_app_static && \
    ls -lh /build/my_app_static && \
    echo "=== Checking for dynamic dependencies ===" && \
    (ldd /build/my_app_static 2>&1 || echo "Binary is static")

# Output stage - just the binary
FROM scratch AS output
COPY --from=builder /build/my_app_static /my_app_static
```

|

||||

|

||||

## Customization Guide

|

||||

|

||||

### 1. Adjust Dependencies

|

||||

|

||||

**Add dependencies** by modifying the `apk add` section:

|

||||

|

||||

```dockerfile

|

||||

RUN apk add --no-cache \

|

||||

build-base \

|

||||

musl-dev \

|

||||

# Add your dependencies here:

|

||||

libpng-dev \

|

||||

libpng-static \

|

||||

libjpeg-turbo-dev \

|

||||

libjpeg-turbo-static

|

||||

```

|

||||

|

||||

**Remove unused dependencies** to speed up builds:

|

||||

- Remove `libwebsockets` section if you don't need WebSocket support

|

||||

- Remove `sqlite` if you don't use databases

|

||||

- Remove `curl` if you don't make HTTP requests

|

||||

|

||||

### 2. Configure nostr_core_lib NIPs

|

||||

|

||||

Specify which NIPs your application needs:

|

||||

|

||||

```bash

|

||||

./build.sh --nips=1,6,19 # Minimal: Basic protocol, keys, bech32

|

||||

./build.sh --nips=1,6,13,17,19,44,59 # Full: All common NIPs

|

||||

./build.sh --nips=all # Everything available

|

||||

```

|

||||

|

||||

**Common NIP combinations:**

|

||||

- **Basic client**: `1,6,19` (events, keys, bech32)

|

||||

- **With encryption**: `1,6,19,44` (add modern encryption)

|

||||

- **With DMs**: `1,6,17,19,44,59` (add private messages)

|

||||

- **Relay/server**: `1,6,13,17,19,42,44,59` (add PoW, auth)

|

||||

|

||||

### 3. Modify Compilation Flags

|

||||

|

||||

**For your application:**

|

||||

|

||||

```dockerfile
# -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0 is REQUIRED for MUSL.
# -I flags set the include paths, src/*.c are your source files,
# -o names the output binary, the .a archive is the Nostr library,
# and the -l flags list the libraries to link.
# (Comments are kept off the continued lines: a comment after a trailing
# backslash would break the line continuation.)
RUN gcc -static -O2 -Wall -Wextra -std=c99 \
    -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0 \
    -I. -Inostr_core_lib \
    src/*.c \
    -o /build/my_app_static \
    nostr_core_lib/libnostr_core_x64.a \
    -lwebsockets -lssl -lcrypto \
    -lsqlite3 -lsecp256k1 -lcurl \
    -lz -lpthread -lm -ldl
```

|

||||

|

||||

**Debug build** (with symbols, no optimization):

|

||||

|

||||

```dockerfile

|

||||

RUN gcc -static -g -O0 -DDEBUG \

|

||||

-U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0 \

|

||||

# ... rest of flags

|

||||

```

|

||||

|

||||

### 4. Multi-Architecture Support

|

||||

|

||||

Build for different architectures:

|

||||

|

||||

```bash

|

||||

# x86_64 (Intel/AMD)

|

||||

docker build --platform linux/amd64 -f Dockerfile.static -t my-app-x86 .

|

||||

|

||||

# ARM64 (Apple Silicon, Raspberry Pi 4+)

|

||||

docker build --platform linux/arm64 -f Dockerfile.static -t my-app-arm64 .

|

||||

```

|

||||

|

||||

## Build Script Template

|

||||

|

||||

Create a `build_static.sh` script for convenience:

|

||||

|

||||

```bash
#!/bin/bash
set -e

SCRIPT_DIR="$(cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"
BUILD_DIR="$SCRIPT_DIR/build"
DOCKERFILE="$SCRIPT_DIR/Dockerfile.static"

# Detect architecture
ARCH=$(uname -m)
case "$ARCH" in
    x86_64)
        PLATFORM="linux/amd64"
        OUTPUT_NAME="my_app_static_x86_64"
        ;;
    aarch64|arm64)
        PLATFORM="linux/arm64"
        OUTPUT_NAME="my_app_static_arm64"
        ;;
    *)
        echo "Unknown architecture: $ARCH"
        exit 1
        ;;
esac

echo "Building for platform: $PLATFORM"
mkdir -p "$BUILD_DIR"

# Build Docker image
docker build \
    --platform "$PLATFORM" \
    -f "$DOCKERFILE" \
    -t my-app-builder:latest \
    --progress=plain \
    .

# Extract binary
CONTAINER_ID=$(docker create my-app-builder:latest)
docker cp "$CONTAINER_ID:/build/my_app_static" "$BUILD_DIR/$OUTPUT_NAME"
docker rm "$CONTAINER_ID"

chmod +x "$BUILD_DIR/$OUTPUT_NAME"

echo "✓ Build complete: $BUILD_DIR/$OUTPUT_NAME"
echo "✓ Size: $(du -h "$BUILD_DIR/$OUTPUT_NAME" | cut -f1)"

# Verify
if ldd "$BUILD_DIR/$OUTPUT_NAME" 2>&1 | grep -q "not a dynamic executable"; then
    echo "✓ Binary is fully static"
else
    echo "⚠ Warning: Binary may have dynamic dependencies"
fi
```

|

||||

|

||||

Make it executable:

|

||||

|

||||

```bash

|

||||

chmod +x build_static.sh

|

||||

./build_static.sh

|

||||

```

|

||||

|

||||

## Common Issues and Solutions

|

||||

|

||||

### Issue 1: Fortification Errors

|

||||

|

||||

**Error:**

|

||||

```

|

||||

undefined reference to '__snprintf_chk'

|

||||

undefined reference to '__fprintf_chk'

|

||||

```

|

||||

|

||||

**Cause**: Some toolchains enable `_FORTIFY_SOURCE` by default when optimizing (for example with `-O2`), and fortified code calls glibc-specific `__*_chk` functions that MUSL does not provide.

|

||||

|

||||

**Solution**: Add these flags to **all** compilation commands:

|

||||

```bash

|

||||

-U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0

|

||||

```

|

||||

|

||||

This must be applied to:

|

||||

1. nostr_core_lib build.sh

|

||||

2. Your application compilation

|

||||

3. Any other libraries you build
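
To confirm that the flags actually reached a given translation unit, the value of the `_FORTIFY_SOURCE` macro can be inspected at compile time. The helper below is a small sketch for that purpose; `fortify_level` and `print_fortify_report` are hypothetical names, and wiring the report into a `--version` style flag is only a suggestion.

```c
#include <assert.h>
#include <stdio.h>

/* Returns the fortification level this file was compiled with (0 = off). */
int fortify_level(void)
{
#if defined(_FORTIFY_SOURCE)
    return _FORTIFY_SOURCE;
#else
    return 0;
#endif
}

/* Print a one-line report, e.g. from a build-info or --version option. */
int print_fortify_report(FILE *out)
{
    assert(out != NULL);

    int level = fortify_level();
    return fprintf(out, "_FORTIFY_SOURCE=%d (%s)\n",
                   level, level > 0 ? "fortified" : "not fortified");
}
```

When compiled with `-U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0`, `fortify_level()` returns 0; any other value means the flags were not applied to that file.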

|

||||

|

||||

### Issue 2: Missing Symbols from nostr_core_lib

|

||||

|

||||

**Error:**

|

||||

```

|

||||

undefined reference to 'nostr_create_event'

|

||||

undefined reference to 'nostr_sign_event'

|

||||

```

|

||||

|

||||

**Cause**: Required NIPs not included in nostr_core_lib build.

|

||||

|

||||

**Solution**: Add missing NIPs:

|

||||

```bash

|

||||

./build.sh --nips=1,6,19 # Add the NIPs you need

|

||||

```

|

||||

|

||||

### Issue 3: Docker Permission Denied

|

||||

|

||||

**Error:**

|

||||

```

|

||||

permission denied while trying to connect to the Docker daemon socket

|

||||

```

|

||||

|

||||

**Solution**:

|

||||

```bash

|

||||

sudo usermod -aG docker $USER

|

||||

newgrp docker # Or logout and login

|

||||

```

|

||||

|

||||

### Issue 4: Binary Won't Run on Target System

|

||||

|

||||

**Checks**:

|

||||

```bash
# 1. Verify it's static
ldd my_app_static  # Should show "not a dynamic executable"

# 2. Check architecture
file my_app_static  # Should match target system

# 3. Test on different distributions
docker run --rm -v "$(pwd)":/app alpine:latest /app/my_app_static --version
docker run --rm -v "$(pwd)":/app ubuntu:latest /app/my_app_static --version
```

|

||||

|

||||

## Project Structure Example

|

||||

|

||||

Organize your project for easy static builds:

|

||||

|

||||

```
my-nostr-app/
├── src/
│   ├── main.c
│   ├── handlers.c
│   └── utils.c
├── nostr_core_lib/          # Git submodule
├── nostr_login_lite/        # Git submodule (if used)
├── Dockerfile.static        # Static build Dockerfile
├── build_static.sh          # Build script
├── Makefile                 # Regular build
└── README.md
```

|

||||

|

||||

### Makefile Integration

|

||||

|

||||

Add static build targets to your Makefile:

|

||||

|

||||

```makefile
# Regular dynamic build
all: my_app

my_app: src/*.c
	gcc -O2 src/*.c -o my_app \
		nostr_core_lib/libnostr_core_x64.a \
		-lssl -lcrypto -lsecp256k1 -lz -lpthread -lm

# Static MUSL build via Docker
static:
	./build_static.sh

# Clean
clean:
	rm -f my_app build/my_app_static_*

.PHONY: all static clean
```

|

||||

|

||||

## Deployment

|

||||

|

||||

### Single Binary Deployment

|

||||

|

||||

```bash

|

||||

# Copy to server

|

||||

scp build/my_app_static_x86_64 user@server:/opt/my-app/

|

||||

|

||||

# Run (no dependencies needed!)

|

||||

ssh user@server

|

||||

/opt/my-app/my_app_static_x86_64

|

||||

```

|

||||

|

||||

### SystemD Service

|

||||

|

||||

```ini

|

||||

[Unit]

|

||||

Description=My Nostr Application

|

||||

After=network.target

|

||||

|

||||

[Service]

|

||||

Type=simple

|

||||

User=myapp

|

||||

WorkingDirectory=/opt/my-app

|

||||

ExecStart=/opt/my-app/my_app_static_x86_64

|

||||

Restart=always

|

||||

RestartSec=5

|

||||

|

||||

[Install]

|

||||

WantedBy=multi-user.target

|

||||

```

|

||||

|

||||

### Docker Container (Minimal)

|

||||

|

||||

```dockerfile

|

||||

FROM scratch

|

||||

COPY my_app_static_x86_64 /app

|

||||

ENTRYPOINT ["/app"]

|

||||

```

|

||||

|

||||

Build and run:

|

||||

```bash

|

||||

docker build -t my-app:latest .

|

||||

docker run --rm my-app:latest --help

|

||||

```

|

||||

|

||||

## Reusing c-relay Files

|

||||

|

||||

You can directly copy these files from c-relay:

|

||||

|

||||

### 1. Dockerfile.alpine-musl

|

||||

```bash

|

||||

cp /path/to/c-relay/Dockerfile.alpine-musl ./Dockerfile.static

|

||||

```

|

||||

|

||||

Then customize:

|

||||

- Change binary name (line 125)

|

||||

- Adjust source files (line 122-124)

|

||||

- Modify include paths (line 120-121)

|

||||

|

||||

### 2. build_static.sh

|

||||

```bash

|

||||

cp /path/to/c-relay/build_static.sh ./

|

||||

```

|

||||

|

||||

Then customize:

|

||||

- Change `OUTPUT_NAME` variable (lines 66, 70)

|

||||

- Update Docker image name (line 98)

|

||||

- Modify verification commands (lines 180-184)

|

||||

|

||||

### 3. .dockerignore (Optional)

|

||||

```bash

|

||||

cp /path/to/c-relay/.dockerignore ./

|

||||

```

|

||||

|

||||

Helps speed up Docker builds by excluding unnecessary files.

|

||||

|

||||

## Best Practices

|

||||

|

||||

1. **Version Control**: Commit your Dockerfile and build script

|

||||

2. **Tag Builds**: Include git commit hash in binary version

|

||||

3. **Test Thoroughly**: Verify on multiple distributions

|

||||

4. **Document Dependencies**: List required NIPs and libraries

|

||||

5. **Automate**: Use CI/CD to build on every commit

|

||||

6. **Archive Binaries**: Keep old versions for rollback

|

||||

|

||||

## Performance Comparison

|

||||

|

||||

| Metric | MUSL Static | glibc Dynamic |
|--------|-------------|---------------|
| Binary Size | 7-10 MB | 2-3 MB + libs |
| Startup Time | ~50ms | ~40ms |
| Memory Usage | Similar | Similar |
| Portability | ✓ Universal | ✗ System-dependent |
| Deployment | Single file | Binary + libraries |

|

||||

|

||||

## References

|

||||

|

||||

- [MUSL libc](https://musl.libc.org/)

|

||||

- [Alpine Linux](https://alpinelinux.org/)

|

||||

- [nostr_core_lib](https://github.com/chebizarro/nostr_core_lib)

|

||||

- [Static Linking Best Practices](https://www.musl-libc.org/faq.html)

|

||||

- [c-relay Implementation](./docs/musl_static_build.md)

|

||||

|

||||

## Example: Minimal Nostr Client

|

||||

|

||||

Here's a complete example of building a minimal Nostr client:

|

||||

|

||||

```c
// minimal_client.c
#include "nostr_core/nostr_core.h"
#include <stdio.h>
#include <stdlib.h>  // free()

int main(void) {
    // Generate keypair
    char nsec[64], npub[64];
    nostr_generate_keypair(nsec, npub);

    printf("Generated keypair:\n");
    printf("Private key (nsec): %s\n", nsec);
    printf("Public key (npub): %s\n", npub);

    // Create and sign a kind-1 text note
    cJSON *event = nostr_create_event(1, "Hello, Nostr!", NULL);
    if (event == NULL) {
        fprintf(stderr, "Failed to create event\n");
        return 1;
    }
    nostr_sign_event(event, nsec);

    // Serialize the signed event and print it
    char *json = cJSON_Print(event);
    if (json != NULL) {
        printf("\nSigned event:\n%s\n", json);
        free(json);
    }

    cJSON_Delete(event);
    return 0;
}
```

|

||||

|

||||

**Dockerfile.static:**

|

||||

```dockerfile
FROM alpine:3.19 AS builder
RUN apk add --no-cache build-base musl-dev git autoconf automake libtool \
    openssl-dev openssl-libs-static zlib-dev zlib-static

WORKDIR /build

# Build secp256k1
RUN cd /tmp && git clone https://github.com/bitcoin-core/secp256k1.git && \
    cd secp256k1 && ./autogen.sh && \
    ./configure --enable-static --disable-shared --prefix=/usr CFLAGS="-fPIC" && \
    make -j$(nproc) && make install

# Copy and build nostr_core_lib
COPY nostr_core_lib /build/nostr_core_lib/
RUN cd nostr_core_lib && \
    sed -i 's/CFLAGS="-Wall/CFLAGS="-U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0 -Wall/' build.sh && \
    ./build.sh --nips=1,6,19

# Build application
COPY minimal_client.c /build/
RUN gcc -static -O2 -Wall -std=c99 \
    -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=0 \
    -Inostr_core_lib -Inostr_core_lib/nostr_core -Inostr_core_lib/cjson \
    minimal_client.c -o /build/minimal_client_static \
    nostr_core_lib/libnostr_core_x64.a \
    -lssl -lcrypto -lsecp256k1 -lz -lpthread -lm -ldl && \
    strip /build/minimal_client_static

FROM scratch
COPY --from=builder /build/minimal_client_static /minimal_client_static
```

|

||||

|

||||

**Build and run:**

|

||||

```bash

|

||||

docker build -f Dockerfile.static -t minimal-client .

|

||||

docker create --name temp minimal-client

|

||||

docker cp temp:/minimal_client_static ./

|

||||

docker rm temp

|

||||

|

||||

./minimal_client_static

|

||||

```

|

||||

|

||||

## Conclusion

|

||||

|

||||

Static MUSL binaries provide the best portability for C applications. While they're slightly larger than dynamic binaries, the benefits of zero dependencies and universal compatibility make them ideal for:

|

||||

|

||||

- Server deployments across different Linux distributions

|

||||

- Embedded systems and IoT devices

|

||||

- Docker containers (FROM scratch)

|

||||

- Distribution to users without dependency management

|

||||

- Long-term archival and reproducibility

|

||||

|

||||

Follow this guide to create portable, self-contained binaries for your Nostr applications!

|

||||

243

api/index.css

@@ -285,7 +285,7 @@ h1 {

|

||||

border-bottom: var(--border-width) solid var(--border-color);

|

||||

padding-bottom: 10px;

|

||||

margin-bottom: 30px;

|

||||

font-weight: normal;

|

||||

font-weight: bold;

|

||||

font-size: 24px;

|

||||

font-family: var(--font-family);

|

||||

color: var(--primary-color);

|

||||

@@ -293,32 +293,57 @@ h1 {

|

||||

|

||||

h2 {

|

||||

font-weight: normal;

|

||||

padding-left: 10px;

|

||||

text-align: center;

|

||||

font-size: 16px;

|

||||

font-family: var(--font-family);

|

||||

color: var(--primary-color);

|

||||

}

|

||||

|

||||

h3 {

|

||||

font-weight: normal;

|

||||

font-size: 12px;

|

||||

font-family: var(--font-family);

|

||||

color: var(--primary-color);

|

||||

padding-bottom: 10px;

|

||||

}

|

||||

|

||||

label {

|

||||

display: block;

|

||||

margin-bottom: 5px;

|

||||

font-weight: lighter;

|

||||

font-size: 10px;

|

||||

font-family: var(--font-family);

|

||||

color: var(--primary-color);

|

||||

}

|

||||

|

||||

|

||||

|

||||

|

||||

.section {

|

||||

background: var(--secondary-color);

|

||||

border: var(--border-width) solid var(--border-color);

|

||||

border-radius: var(--border-radius);

|

||||

padding: 20px;

|

||||

margin-bottom: 20px;

|

||||

margin-left: 5px;

|

||||

margin-right:5px;

|

||||

}

|

||||

|

||||

.section-header {

|

||||

display: flex;

|

||||

justify-content: center;

|

||||

align-items: center;

|

||||

padding-bottom: 15px;

|

||||

}

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

.input-group {

|

||||

margin-bottom: 15px;

|

||||

}

|

||||

|

||||

label {

|

||||

display: block;

|

||||

margin-bottom: 5px;

|

||||

font-weight: bold;

|

||||

font-size: 14px;

|

||||

font-family: var(--font-family);

|

||||

color: var(--primary-color);

|

||||

}

|

||||

|

||||

input,

|

||||

textarea,

|

||||

@@ -491,6 +516,24 @@ button:disabled {

|

||||

border-radius: 0;

|

||||

}

|

||||

|

||||

/* Relay Events Styles */

|

||||

.status-message {

|

||||

margin-top: 10px;

|

||||

padding: 8px;

|

||||

border-radius: var(--border-radius);

|

||||

font-size: 14px;

|

||||

font-family: var(--font-family);

|

||||

text-align: center;

|

||||

}

|

||||

|

||||

.relay-entry {

|

||||

border: var(--border-width) solid var(--border-color);

|

||||

border-radius: var(--border-radius);

|

||||

padding: 10px;

|

||||

margin-bottom: 10px;

|

||||

background: var(--secondary-color);

|

||||

}

|

||||

|

||||

.config-value-input:focus {

|

||||

border: 1px solid var(--accent-color);

|

||||

background: var(--secondary-color);

|

||||

@@ -660,14 +703,7 @@ button:disabled {

|

||||

display: none;

|

||||

}

|

||||

|

||||

.section-header {

|

||||

display: flex;

|

||||

justify-content: space-between;

|

||||

align-items: center;

|

||||

/* margin-bottom: 15px; */

|

||||

/* border-bottom: var(--border-width) solid var(--border-color); */

|

||||

/* padding-bottom: 10px; */

|

||||

}

|

||||

|

||||

|

||||

.countdown-btn {

|

||||

width: auto;

|

||||

@@ -948,10 +984,8 @@ button:disabled {

|

||||

padding: 6px 8px;

|

||||

text-align: left;

|

||||

font-family: var(--font-family);

|

||||

max-width: 200px;

|

||||

overflow: hidden;

|

||||

text-overflow: ellipsis;

|

||||

white-space: nowrap;

|

||||

min-width: 100px;

|

||||

}

|

||||

|

||||

.sql-results-table th {

|

||||

@@ -1107,3 +1141,170 @@ body.dark-mode .sql-results-table tbody tr:nth-child(even) {

|

||||

border-radius: var(--border-radius);

|

||||

box-sizing: border-box;

|

||||

}

|

||||

|

||||

/* ================================

|

||||

SIDE NAVIGATION MENU

|

||||

================================ */

|

||||

|

||||

.side-nav {

|

||||

position: fixed;

|

||||

top: 0;

|

||||

left: -300px;

|

||||

width: 280px;

|

||||

height: 100vh;

|

||||

background: var(--secondary-color);

|

||||

border-right: var(--border-width) solid var(--border-color);

|

||||

z-index: 1000;

|

||||

transition: left 0.3s ease;

|

||||

overflow-y: auto;

|

||||

padding-top: 80px;

|

||||

}

|

||||

|

||||

.side-nav.open {

|

||||

left: 0;

|

||||

}

|

||||

|

||||

.side-nav-overlay {

|

||||

position: fixed;

|

||||

top: 0;

|

||||

left: 0;

|

||||

width: 100%;

|

||||

height: 100%;

|

||||

background: rgba(0, 0, 0, 0.5);

|

||||

z-index: 999;

|

||||

display: none;

|

||||

}

|

||||

|

||||

.side-nav-overlay.show {

|

||||

display: block;

|

||||

}

|

||||

|

||||

.nav-menu {

|

||||

list-style: none;

|

||||

padding: 0;

|

||||

margin: 0;

|

||||

}

|

||||

|

||||

.nav-menu li {

|

||||

border-bottom: var(--border-width) solid var(--muted-color);

|

||||

}

|

||||

|

||||

.nav-menu li:last-child {

|

||||

border-bottom: none;

|

||||

}

|

||||

|

||||

.nav-item {

|

||||

display: block;

|

||||

padding: 15px 20px;

|

||||

color: var(--primary-color);

|

||||

text-decoration: none;

|

||||

font-family: var(--font-family);

|

||||

font-size: 16px;

|

||||

font-weight: bold;

|

||||

transition: all 0.2s ease;

|

||||

cursor: pointer;

|

||||

border: 2px solid var(--secondary-color);

|

||||

background: none;

|

||||

width: 100%;

|

||||

text-align: left;

|

||||

}

|

||||

|

||||

.nav-item:hover {

|

||||

border: 2px solid var(--secondary-color);

|

||||

background:var(--muted-color);

|

||||

color: var(--accent-color);

|

||||

}

|

||||

|

||||

.nav-item.active {

|

||||

text-decoration: underline;

|

||||

padding-left: 16px;

|

||||

}

|

||||

|

||||

.nav-footer {

|

||||

position: absolute;

|

||||

bottom: 20px;

|

||||

left: 0;

|

||||

right: 0;

|

||||

padding: 0 20px;

|

||||

}

|

||||

|

||||

.nav-footer-btn {

|

||||

display: block;

|

||||

width: 100%;

|

||||

padding: 12px 20px;

|

||||

margin-bottom: 8px;

|

||||

color: var(--primary-color);

|

||||

|

||||

border: 1px solid var(--border-color);

|

||||

border-radius: 4px;

|

||||

font-family: var(--font-family);

|

||||

font-size: 14px;

|

||||

font-weight: bold;

|

||||

cursor: pointer;

|

||||

transition: all 0.2s ease;

|

||||

}

|

||||

|

||||

.nav-footer-btn:hover {

|

||||

background:var(--muted-color);

|

||||

border-color: var(--accent-color);

|

||||

}

|

||||

|

||||

.nav-footer-btn:last-child {

|

||||

margin-bottom: 0;

|

||||

}

|

||||

|

||||

.header-title.clickable {

|

||||

cursor: pointer;

|

||||

transition: all 0.2s ease;

|

||||

}

|

||||

|

||||

.header-title.clickable:hover {

|

||||

opacity: 0.8;

|

||||

}

|

||||

|

||||

/* ================================

|

||||

SUBSCRIPTION TABLE COLLAPSIBLE GROUPS

|

||||

================================ */

|

||||

|

||||

/* Subscription group header styles */

|

||||

.subscription-group-header {

|

||||

|

||||

font-weight: 500;

|

||||

|

||||

cursor: pointer;

|

||||

user-select: none;

|

||||

}

|

||||

|

||||

.subscription-group-header:hover {

|

||||

background-color: var(--secondary-color);

|

||||

}

|

||||

|

||||

.expand-icon {

|

||||

display: inline-block;

|

||||

width: 20px;

|

||||

transition: transform 0.2s ease;

|

||||

font-size: 12px;

|

||||

}

|

||||

|

||||

/* Detail row styles */

|

||||

.subscription-detail-row {

|

||||

/* background-color: var(--secondary-color); */

|

||||

}

|

||||

|

||||

.subscription-detail-row:hover {

|

||||

background-color: var(--muted-color);

|

||||

}

|

||||

|

||||

/* Detail row cell styles */

|

||||

.subscription-detail-prefix {

|

||||

padding-left: 30px;

|

||||

font-family: 'Courier New', monospace;

|

||||

font-size: 11px;

|

||||

color: var(--muted-color);

|

||||

}

|

||||

|

||||

.subscription-detail-id {

|

||||

font-family: 'Courier New', monospace;

|

||||

font-size: 12px;

|

||||

}

|

||||

|

||||

|

||||

137

api/index.html

@@ -9,11 +9,31 @@

|

||||

</head>

|

||||

|

||||

<body>

|

||||

<!-- Side Navigation Menu -->

|

||||

<nav class="side-nav" id="side-nav">

|

||||

<ul class="nav-menu">

|

||||

<li><button class="nav-item" data-page="statistics">Statistics</button></li>

|

||||

<li><button class="nav-item" data-page="subscriptions">Subscriptions</button></li>

|

||||

<li><button class="nav-item" data-page="configuration">Configuration</button></li>

|

||||

<li><button class="nav-item" data-page="authorization">Authorization</button></li>

|

||||

<li><button class="nav-item" data-page="relay-events">Relay Events</button></li>

|

||||

<li><button class="nav-item" data-page="dm">DM</button></li>

|

||||

<li><button class="nav-item" data-page="database">Database Query</button></li>

|

||||

</ul>

|

||||

<div class="nav-footer">

|

||||

<button class="nav-footer-btn" id="nav-dark-mode-btn">DARK MODE</button>

|

||||

<button class="nav-footer-btn" id="nav-logout-btn">LOGOUT</button>

|

||||

</div>

|

||||

</nav>

|

||||

|

||||

<!-- Side Navigation Overlay -->

|

||||

<div class="side-nav-overlay" id="side-nav-overlay"></div>

|

||||

|

||||

<!-- Header with title and profile display -->

|

||||

<div class="section">

|

||||

|

||||

<div class="header-content">

|

||||

<div class="header-title">

|

||||

<div class="header-title clickable" id="header-title">

|

||||

<span class="relay-letter" data-letter="R">R</span>

|

||||

<span class="relay-letter" data-letter="E">E</span>

|

||||

<span class="relay-letter" data-letter="L">L</span>

|

||||

@@ -34,10 +54,7 @@

|

||||

<span id="header-user-name" class="header-user-name">Loading...</span>

|

||||

</div>

|

||||

<!-- Logout dropdown -->

|

||||

<div class="logout-dropdown" id="logout-dropdown" style="display: none;">

|

||||

<button type="button" id="dark-mode-btn" class="logout-btn">🌙 DARK MODE</button>

|

||||

<button type="button" id="logout-btn" class="logout-btn">LOGOUT</button>

|

||||

</div>

|

||||

<!-- Dropdown menu removed - buttons moved to sidebar -->

|

||||

</div>

|

||||

</div>

|

||||

|

||||

@@ -51,12 +68,10 @@

|

||||

</div>

|

||||

|

||||

<!-- DATABASE STATISTICS Section -->

|

||||

<!-- Subscribe to kind 24567 events to receive real-time monitoring data -->

|

||||

<div class="section flex-section" id="databaseStatisticsSection" style="display: none;">

|

||||

<div class="section-header">

|

||||

<h2>DATABASE STATISTICS</h2>

|

||||

<!-- Monitoring toggle button will be inserted here by JavaScript -->

|

||||

<!-- Temporarily disable auto-refresh button for real-time monitoring -->

|

||||

<!-- <button type="button" id="refresh-stats-btn" class="countdown-btn"></button> -->

|

||||

DATABASE STATISTICS

|

||||

</div>

|

||||

|

||||

<!-- Event Rate Graph Container -->

|

||||

@@ -81,10 +96,26 @@

|

||||

<td>Total Events</td>

|

||||

<td id="total-events">-</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Process ID</td>

|

||||

<td id="process-id">-</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Active Subscriptions</td>

|

||||

<td id="active-subscriptions">-</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Memory Usage</td>

|

||||

<td id="memory-usage">-</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>CPU Core</td>

|

||||

<td id="cpu-core">-</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>CPU Usage</td>

|

||||

<td id="cpu-usage">-</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Oldest Event</td>

|

||||

<td id="oldest-event">-</td>

|

||||

@@ -175,25 +206,15 @@

|

||||

<!-- SUBSCRIPTION DETAILS Section (Admin Only) -->

|

||||

<div class="section flex-section" id="subscriptionDetailsSection" style="display: none;">

|

||||

<div class="section-header">

|

||||

<h2>ACTIVE SUBSCRIPTION DETAILS</h2>

|

||||

ACTIVE SUBSCRIPTION DETAILS

|

||||

</div>

|

||||

|

||||

<div class="input-group">

|

||||

<div class="config-table-container">

|

||||

<table class="config-table" id="subscription-details-table">

|

||||

<thead>

|

||||

<tr>

|

||||

<th>Subscription ID</th>

|

||||

<th>Client IP</th>

|

||||

<th>Duration</th>

|

||||

<th>Events Sent</th>

|

||||

<th>Status</th>

|

||||

<th>Filters</th>

|

||||

</tr>

|

||||

</thead>

|

||||

<tbody id="subscription-details-table-body">

|

||||

<tr>

|

||||

<td colspan="6" style="text-align: center; font-style: italic;">No subscriptions active</td>

|

||||

<td colspan="4" style="text-align: center; font-style: italic;">No subscriptions active</td>

|

||||

</tr>

|

||||

</tbody>

|

||||

</table>

|

||||

@@ -203,7 +224,9 @@

|

||||

|

||||

<!-- Testing Section -->

|

||||

<div id="div_config" class="section flex-section" style="display: none;">

|

||||

<h2>RELAY CONFIGURATION</h2>

|

||||

<div class="section-header">

|

||||

RELAY CONFIGURATION

|

||||

</div>

|

||||

<div id="config-display" class="hidden">

|

||||

<div class="config-table-container">

|

||||

<table class="config-table" id="config-table">

|

||||

@@ -230,7 +253,7 @@

|

||||

<!-- Auth Rules Management - Moved after configuration -->

|

||||

<div class="section flex-section" id="authRulesSection" style="display: none;">

|

||||

<div class="section-header">

|

||||

<h2>AUTH RULES MANAGEMENT</h2>

|

||||

AUTH RULES MANAGEMENT

|

||||

</div>

|

||||

|

||||

<!-- Auth Rules Table -->

|

||||

@@ -292,7 +315,7 @@

|

||||

</div>

|

||||

|

||||

<!-- Outbox -->

|

||||

<div class="input-group">

|

||||

<div>

|

||||

<label for="dm-outbox">Send Message to Relay:</label>

|

||||

<textarea id="dm-outbox" rows="4" placeholder="Enter your message to send to the relay..."></textarea>

|

||||

</div>

|

||||

@@ -311,6 +334,72 @@

|

||||

</div>

|

||||

</div>

|

||||

|

||||

<!-- RELAY EVENTS Section -->

|

||||

<div class="section" id="relayEventsSection" style="display: none;">

|

||||

<div class="section-header">

|

||||

RELAY EVENTS MANAGEMENT

|

||||

</div>

|

||||

|

||||

<!-- Kind 0: User Metadata -->

|

||||

<div class="input-group">

|

||||

<h3>Kind 0: User Metadata</h3>

|

||||

<div class="form-group">

|

||||

<label for="kind0-name">Name:</label>

|

||||

<input type="text" id="kind0-name" placeholder="Relay Name">

|

||||

</div>

|

||||

<div class="form-group">

|

||||

<label for="kind0-about">About:</label>

|

||||

<textarea id="kind0-about" rows="3" placeholder="Relay Description"></textarea>

|

||||

</div>

|

||||

<div class="form-group">

|

||||

<label for="kind0-picture">Picture URL:</label>

|

||||

<input type="url" id="kind0-picture" placeholder="https://example.com/logo.png">

|

||||

</div>

|

||||

<div class="form-group">

|

||||

<label for="kind0-banner">Banner URL:</label>

|

||||

<input type="url" id="kind0-banner" placeholder="https://example.com/banner.png">

|

||||

</div>

|

||||

<div class="form-group">

|

||||

<label for="kind0-nip05">NIP-05:</label>

|

||||

<input type="text" id="kind0-nip05" placeholder="relay@example.com">

|

||||

</div>

|

||||

<div class="form-group">

|

||||

<label for="kind0-website">Website:</label>

|

||||

<input type="url" id="kind0-website" placeholder="https://example.com">

|

||||

</div>

|

||||

<div class="inline-buttons">

|

||||

<button type="button" id="submit-kind0-btn">UPDATE METADATA</button>

|

||||

</div>

|

||||

<div id="kind0-status" class="status-message"></div>

|

||||

</div>

|

||||

|

||||

<!-- Kind 10050: DM Relay List -->

|

||||

<div class="input-group">

|

||||

<h3>Kind 10050: DM Relay List</h3>

|

||||

<div class="form-group">

|

||||

<label for="kind10050-relays">Relay URLs (one per line):</label>

|

||||

<textarea id="kind10050-relays" rows="4" placeholder="wss://relay1.com wss://relay2.com"></textarea>

|

||||

</div>

|

||||

<div class="inline-buttons">

|

||||

<button type="button" id="submit-kind10050-btn">UPDATE DM RELAYS</button>

|

||||

</div>

|

||||

<div id="kind10050-status" class="status-message"></div>

|

||||

</div>

|

||||

|

||||

<!-- Kind 10002: Relay List -->

|

||||

<div class="input-group">

|

||||

<h3>Kind 10002: Relay List</h3>

|

||||

<div id="kind10002-relay-entries">

|

||||

<!-- Dynamic relay entries will be added here -->

|

||||

</div>

|

||||

<div class="inline-buttons">

|

||||

<button type="button" id="add-relay-entry-btn">ADD RELAY</button>

|

||||

<button type="button" id="submit-kind10002-btn">UPDATE RELAYS</button>

|

||||

</div>

|

||||

<div id="kind10002-status" class="status-message"></div>

|

||||

</div>

|

||||

</div>

|

||||

|

||||

<!-- SQL QUERY Section -->

|

||||

<div class="section" id="sqlQuerySection" style="display: none;">

|

||||

<div class="section-header">

|

||||

|

||||

1440

api/index.js

@@ -18,6 +18,7 @@ class ASCIIBarChart {

|

||||

* @param {boolean} [options.useBinMode=false] - Enable time bin mode for data aggregation

|

||||

* @param {number} [options.binDuration=10000] - Duration of each time bin in milliseconds (10 seconds default)

|

||||

* @param {string} [options.xAxisLabelFormat='elapsed'] - X-axis label format: 'elapsed', 'bins', 'timestamps', 'ranges'

|

||||

* @param {boolean} [options.debug=false] - Enable debug logging

|

||||

*/

|

||||

constructor(containerId, options = {}) {

|

||||

this.container = document.getElementById(containerId);

|

||||

@@ -29,6 +30,7 @@ class ASCIIBarChart {

|

||||

this.xAxisLabel = options.xAxisLabel || '';

|

||||

this.yAxisLabel = options.yAxisLabel || '';

|

||||

this.autoFitWidth = options.autoFitWidth !== false; // Default to true

|

||||

this.debug = options.debug || false; // Debug logging option

|

||||

|

||||

// Time bin configuration

|

||||

this.useBinMode = options.useBinMode !== false; // Default to true

|

||||

@@ -61,21 +63,10 @@ class ASCIIBarChart {

|

||||

* @param {number} value - The numeric value to add

|

||||

*/

|

||||

addValue(value) {

|

||||

if (this.useBinMode) {

|

||||

// Time bin mode: increment count in current active bin

|

||||

// Time bin mode: add value to current active bin count

|

||||

this.checkBinRotation(); // Ensure we have an active bin

|

||||

this.bins[this.currentBinIndex].count++;

|

||||

this.bins[this.currentBinIndex].count += value; // Changed from ++ to += value

|

||||

this.totalDataPoints++;

|

||||

} else {

|

||||

// Legacy mode: add individual values

|

||||

this.data.push(value);

|

||||

this.totalDataPoints++;

|

||||

|

||||

// Keep only the most recent data points

|

||||

if (this.data.length > this.maxDataPoints) {

|

||||

this.data.shift();

|

||||

}

|

||||

}

|

||||

|

||||

this.render();

|

||||

this.updateInfo();

|

||||

@@ -119,7 +110,7 @@ class ASCIIBarChart {

|

||||

const totalWidth = yAxisPadding + yAxisNumbers + separator + dataWidth + padding;

|

||||

|

||||

// Only log when width changes

|

||||

if (this.lastChartWidth !== totalWidth) {

|

||||

if (this.debug && this.lastChartWidth !== totalWidth) {

|

||||

console.log('getChartWidth changed:', { dataLength, totalWidth, previous: this.lastChartWidth });

|

||||

this.lastChartWidth = totalWidth;

|

||||

}

|

||||

@@ -142,7 +133,7 @@ class ASCIIBarChart {

|

||||

// Calculate optimal font size

|

||||

// For monospace fonts, character width is approximately 0.6 * font size

|

||||

// Use a slightly smaller ratio to fit more content

|

||||

const charWidthRatio = 0.6;

|

||||

const charWidthRatio = 0.7;

|

||||

const padding = 30; // Reduce padding to fit more content

|

||||

const availableWidth = containerWidth - padding;

|

||||

const optimalFontSize = Math.floor((availableWidth / chartWidth) / charWidthRatio);

|

||||

@@ -151,7 +142,7 @@ class ASCIIBarChart {

|

||||

const fontSize = Math.max(4, Math.min(20, optimalFontSize));

|

||||

|

||||

// Only log when font size changes

|

||||

if (this.lastFontSize !== fontSize) {

|

||||

if (this.debug && this.lastFontSize !== fontSize) {

|

||||

console.log('fontSize changed:', { containerWidth, chartWidth, fontSize, previous: this.lastFontSize });

|

||||

this.lastFontSize = fontSize;

|

||||

}

|

||||

@@ -190,7 +181,9 @@ class ASCIIBarChart {

|

||||

}

|

||||

});

|

||||

|

||||

if (this.debug) {

|

||||

console.log('render() dataToRender:', dataToRender, 'bins length:', this.bins.length);

|

||||

}

|

||||

maxValue = Math.max(...dataToRender);

|

||||

minValue = Math.min(...dataToRender);

|

||||

valueRange = maxValue - minValue;

|

||||

@@ -243,8 +236,8 @@ class ASCIIBarChart {

|

||||

}

|

||||

}

|

||||

|

||||

// Calculate the actual count value this row represents (0 at bottom, increasing upward)

|

||||

const rowCount = (row - 1) * scaleFactor;

|

||||

// Calculate the actual count value this row represents (1 at bottom, increasing upward)

|

||||

const rowCount = (row - 1) * scaleFactor + 1;

|

||||

|

||||

// Add Y-axis label (show actual count values)

|

||||

line += String(rowCount).padStart(3, ' ') + ' |';

|

||||

|

||||

@@ -1,3 +1,19 @@

|

||||

#!/bin/bash

# Copy the binary to the deployment location
cp build/c_relay_x86 ~/Storage/c_relay/crelay

# Copy the service file to systemd (use the main service file)
sudo cp systemd/c-relay.service /etc/systemd/system/c-relay-local.service

# Reload systemd daemon to pick up the new service
sudo systemctl daemon-reload

# Enable the service (if not already enabled)
sudo systemctl enable c-relay-local.service

# Restart the service
sudo systemctl restart c-relay-local.service

# Show service status
sudo systemctl status c-relay-local.service --no-pager -l

|

||||

|

||||

@@ -175,6 +175,18 @@ Configuration events follow the standard Nostr event format with kind 33334:

|

||||

- **Impact**: Allows some flexibility in expiration timing
- **Example**: `"600"` (10 minute grace period)

### NIP-59 Gift Wrap Timestamp Configuration

#### `nip59_timestamp_max_delay_sec`
- **Description**: Controls timestamp randomization for NIP-59 gift wraps
- **Default**: `"0"` (no randomization)
- **Range**: `0` to `604800` (7 days)
- **Impact**: Affects compatibility with other Nostr clients for direct messaging
- **Values**:
  - `"0"`: No randomization (maximum compatibility)
  - `"1-604800"`: Random timestamp between now and N seconds ago
- **Example**: `"172800"` (2 days randomization for privacy)
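
The "random timestamp between now and N seconds ago" behaviour can be sketched roughly as follows. This is only an illustration: the helper name `nip59_random_timestamp`, the clamping to the documented range, and the use of `rand()` are assumptions, and the relay's actual implementation and random source may differ.

```c
#include <assert.h>
#include <stdlib.h>
#include <time.h>

#define NIP59_MAX_DELAY_LIMIT 604800L /* 7 days, the documented upper bound */

/* Hypothetical helper: returns a timestamp in [now - max_delay_sec, now].
 * A max_delay_sec of 0 disables randomization and returns `now` unchanged. */
static time_t nip59_random_timestamp(time_t now, long max_delay_sec)
{
    assert(max_delay_sec >= 0);

    if (max_delay_sec > NIP59_MAX_DELAY_LIMIT) {
        max_delay_sec = NIP59_MAX_DELAY_LIMIT; /* clamp to the documented range */
    }
    if (max_delay_sec == 0) {
        return now; /* "0": no randomization */
    }

    /* rand() keeps the sketch short. It has modulo bias, and on platforms
     * with a small RAND_MAX it cannot cover the full 7-day range, so a
     * real implementation would likely use a stronger random source. */
    long offset = rand() % (max_delay_sec + 1);
    return now - (time_t)offset;
}
```

With `max_delay_sec` set to `172800`, every returned timestamp falls within the last two days; with `0`, the current time is returned as-is.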

|

||||

|

||||

## Configuration Examples

### Basic Relay Setup

|

||||

|

||||

298

docs/libwebsockets_proper_pattern.md

Normal file

@@ -0,0 +1,298 @@

|

||||

# Libwebsockets Proper Pattern - Message Queue Design

## Problem Analysis

### Current Violation
We're calling `lws_write()` directly from multiple code paths:
1. **Event broadcast** (subscriptions.c:667) - when events arrive
2. **OK responses** (websockets.c:855) - when processing EVENT messages
3. **EOSE responses** (websockets.c:976) - when processing REQ messages
4. **COUNT responses** (websockets.c:1922) - when processing COUNT messages

This violates libwebsockets' design pattern which requires:
- **`lws_write()` ONLY called from `LWS_CALLBACK_SERVER_WRITEABLE`**
- Application queues messages and requests writeable callback
- Libwebsockets handles write timing and socket buffer management

### Consequences of Violation
1. Partial writes when socket buffer is full
2. Multiple concurrent write attempts before callback fires
3. "write already pending" errors with single buffer
4. Frame corruption from interleaved partial writes
5. "Invalid frame header" errors on client side
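
The queue-then-write discipline described above can be modelled in plain C without the library. The sketch below is a minimal, library-free illustration: `msg_queue`, `queue_message`, and `on_writeable` are hypothetical names, and the comments mark where the real `lws_callback_on_writable()` and `lws_write()` calls would go in relay code.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* One queued outbound message (hypothetical structure). */
struct queued_msg {
    char *data;
    size_t len;
    struct queued_msg *next;
};

/* Per-connection FIFO of pending messages. */
struct msg_queue {
    struct queued_msg *head;
    struct queued_msg *tail;
    int writeable_requested; /* stands in for lws_callback_on_writable(wsi) */
};

/* Called from any code path (event broadcast, OK, EOSE, COUNT).
 * Copies the payload, appends it to the queue, and requests a writeable
 * callback. Returns 0 on success, -1 on allocation failure. */
static int queue_message(struct msg_queue *q, const char *data, size_t len)
{
    assert(q != NULL && data != NULL);

    struct queued_msg *m = malloc(sizeof(*m));
    if (m == NULL) {
        return -1;
    }
    m->data = malloc(len ? len : 1);
    if (m->data == NULL) {
        free(m);
        return -1;
    }
    memcpy(m->data, data, len);
    m->len = len;
    m->next = NULL;

    if (q->tail != NULL) {
        q->tail->next = m;
    } else {
        q->head = m;
    }
    q->tail = m;

    q->writeable_requested = 1; /* real code: lws_callback_on_writable(wsi); */
    return 0;
}

/* Models the LWS_CALLBACK_SERVER_WRITEABLE handler: sends exactly one
 * message per callback and re-requests the callback if more remain.
 * Copies up to out_cap bytes into out (truncating longer messages) and
 * returns the number of bytes copied, or 0 if the queue was empty. */
static size_t on_writeable(struct msg_queue *q, char *out, size_t out_cap)
{
    assert(q != NULL && out != NULL);

    struct queued_msg *m = q->head;
    q->writeable_requested = 0;
    if (m == NULL) {
        return 0;
    }

    size_t n = m->len < out_cap ? m->len : out_cap;
    /* real code: lws_write(wsi, buf + LWS_PRE, len, LWS_WRITE_TEXT); */
    memcpy(out, m->data, n);

    q->head = m->next;
    if (q->head == NULL) {
        q->tail = NULL;
    } else {
        q->writeable_requested = 1; /* more to send: ask for another callback */
    }

    free(m->data);
    free(m);
    return n;
}
```

Because only `on_writeable` ever touches the socket in this model, two producers can queue messages back to back without interleaving partial frames, which is the failure mode listed above.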

|

||||

|

||||

## Correct Architecture

### Message Queue Pattern

|

||||

|

||||

```

|

||||

┌─────────────────────────────────────────────────────────────┐

|

||||

│ Application Layer │

|

||||

├─────────────────────────────────────────────────────────────┤

|

||||

│ │

|

||||

│ Event Arrives → Queue Message → Request Writeable Callback │

|

||||

│ REQ Received → Queue EOSE → Request Writeable Callback │

|

||||

│ EVENT Received→ Queue OK → Request Writeable Callback │

|

||||

│ COUNT Received→ Queue COUNT → Request Writeable Callback │

|

||||

│ │

|

||||

└─────────────────────────────────────────────────────────────┘

|

||||

↓

|

||||

lws_callback_on_writable(wsi)

|

||||

↓